Okay, I know there are a lot of different explanations of latency, bandwidth, and throughput in networks (see, for instance, here, here, here, here, and here), but every once in a while I get a question about whether or not 40G will make things go “faster” in networks (often relating to FCoE and storage in general). So why write another one? I wanted to see how fast I could make this easy to understand.

Last night as I was trying to wind down to go to sleep, I had a brainstorm about an interesting visual (to me, at least) that might help explain some of the different concepts in moving data around. Your mileage may vary.

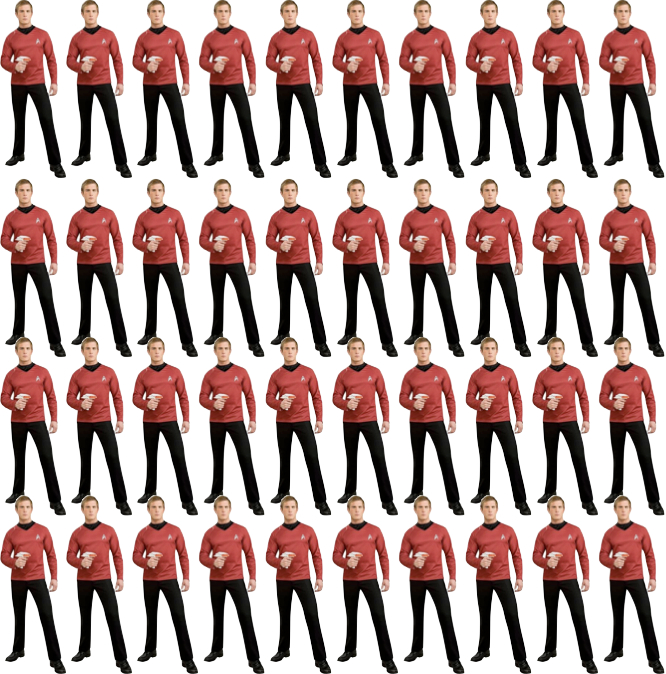

Let us suppose you are the transporter engineer for the USS Enterprise on Star Trek. The Enterprise has been sent to rescue a bunch of red shirts that have been left on a planet that is about to explode.

Now, it takes time to dematerialize a Red Shirt dude (who will promptly change his tunic as soon as he’s on board, now that he’s safe), send him through hyperspace, rematerialize him on the transporter pad, and then prepare for the next Red Shirt. In networking terms, the time it takes to do all of this is analogous to latency. Let’s suppose for our example that it takes one second.

Now, if you have a whole bunch of Red Shirts who need to come across that transporter and you can only bring them on one at a time (i.e., 1 Red Shirt per second, or 1 RSps), it’s going to take a long time. I mean, you have physics as well as equipment limitations to deal with.

Now, suppose we upgrade your transporter pad so that it can handle more than one at a time, going from 1 Red Shirt to 10. What we’ve done is increase the amount of data that can be sent at the same time. In other words, we have increased the total bandwidth, because there is now more room on the stream for Red Shirts.

Now, keep in mind that we haven’t made the transporter faster, but we have increased the throughput. In other words, in the same one second (latency) it takes to send one Red Shirt, we can now send 10, for a throughput of 10 RSps.
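If it helps to see the arithmetic, here is a toy model of the transporter room. It is a sketch under stated assumptions, not anything from a real API: the names `LATENCY_S` and `throughput_rsps` are made up for illustration, every pad is assumed to work in parallel, and every transport cycle is assumed to take the same fixed one second.

```python
LATENCY_S = 1.0  # assumed time for one dematerialize/transmit/rematerialize cycle


def throughput_rsps(pads: int, latency_s: float = LATENCY_S) -> float:
    """Red Shirts per second when `pads` Red Shirts are beamed up per cycle."""
    # Bandwidth is how many Red Shirts fit in one cycle (the number of pads);
    # latency is how long one cycle takes. Throughput is their ratio.
    return pads / latency_s


for pads in (1, 10, 40):
    # The latency never changes; only the number of pads does.
    print(f"{pads:>2} pad(s): latency {LATENCY_S} s, "
          f"throughput {throughput_rsps(pads):g} RSps")
```

Adding pads changes the numerator only, which is the whole point: throughput goes up while the time each Red Shirt spends in the beam stays put.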

So, continuing with our analogy, let us suppose that we improve our transporter room again so that we have not one pad, not ten pads, but 40.

Again, we still have to cope with the time it takes to dematerialize, transmit, rematerialize, and prepare for each Red Shirt. We can’t cut corners here, because we don’t want to lose anyone. But now our total throughput is 40 RSps.

Obviously, it now takes less time to get the same number of crewmen aboard: with more throughput overall, we clear everyone off the planet sooner, so in that sense it’s faster. Do we move more data in the same amount of time? Yes. Does any individual Red Shirt get beamed up faster? No. Is it better? I suppose it’s best to ask the guys wearing blue shirts right now…
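To put numbers on “less total time, same time per Red Shirt,” here is a small sketch. The 400 stranded Red Shirts and the function name `evacuation_time_s` are hypothetical, and it assumes a partly filled last cycle still takes a full second.

```python
import math

LATENCY_S = 1.0  # assumed seconds per transport cycle, as in the example


def evacuation_time_s(red_shirts: int, pads: int, latency_s: float = LATENCY_S) -> float:
    """Total mission time when each cycle beams up to `pads` Red Shirts at once."""
    # A partly filled final cycle still costs a full cycle.
    cycles = math.ceil(red_shirts / pads)
    return cycles * latency_s


stranded = 400  # hypothetical number of Red Shirts left on the planet
for pads in (1, 10, 40):
    print(f"{pads:>2} pad(s): mission takes {evacuation_time_s(stranded, pads):g} s, "
          f"each transport still takes {LATENCY_S:g} s")
```

The mission drops from 400 seconds to 40 to 10, while the second column never moves.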

Hope this helps someone. Somewhere. Maybe on a planet near you, and especially if you have a fondness for red shirts.

Comments

The flaw in this analogy is that red shirts aren’t all saved. Some just don’t make it, so the 10/40 throughput should be 8/32.

Okay, so help me out here: are you referring to 8b/10b encoding?
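If 8/32 is meant as 8b/10b encoding, the arithmetic works like this: every 10 bits on the wire carry 8 bits of data, so 80% of the signaling rate is usable, and 10 and 40 become 8 and 32. One caveat worth knowing: Ethernet usually quotes its rate *after* encoding. 1000BASE-X signals at 1.25 Gbaud with 8b/10b to deliver 1 Gb/s, and 40GBASE-R runs four lanes at 10.3125 Gbaud (41.25 Gbaud total) with 64b/66b to deliver 40 Gb/s. The sketch below only models line-code overhead; the `ENCODINGS` table and `data_rate` name are illustrative, and framing and protocol overhead are ignored.

```python
from fractions import Fraction

# Data bits carried per bit on the wire for two common line codes.
ENCODINGS = {
    "8b/10b": Fraction(8, 10),    # 10 line bits for every 8 data bits
    "64b/66b": Fraction(64, 66),  # 66 line bits for every 64 data bits
}


def data_rate(line_rate: float, encoding: str) -> float:
    """Usable data rate after line-encoding overhead (same units as line_rate)."""
    # Fraction keeps the arithmetic exact for values like 1.25 and 41.25.
    return float(Fraction(line_rate) * ENCODINGS[encoding])


# The commenter's reading: 10 and 40 "pads" each lose 20% to 8b/10b.
print(data_rate(10, "8b/10b"), data_rate(40, "8b/10b"))  # 8.0 32.0
# Real Ethernet: the signaling rate is higher than the quoted data rate.
print(data_rate(1.25, "8b/10b"))    # 1000BASE-X: 1.25 Gbaud -> 1.0 Gb/s
print(data_rate(41.25, "64b/66b"))  # 40GBASE-R: 4 x 10.3125 Gbaud -> 40.0 Gb/s
```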

I would argue (as I have been known to do) that it IS faster. The mission of saving 40 red shirts will take 1/40th of the time with 40 pads as opposed to one. Shorter total mission time = faster. Applying this to storage, I would argue time and again that 40G is faster than 1G “under heavy enough loads.”

The amount of time it takes to do the work is less, so from a workload perspective it is faster. In other words, you get more throughput for the same amount of time invested. If that means you get done sooner, then yes, it is faster. From the perspective of the crewmen, however, the trip from one place to the other isn’t any faster, though they do not have to wait as long.
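The crewman’s-eye view can be sketched too: separate the time a Red Shirt spends waiting on the planet from the time spent in the beam. This assumes 40 Red Shirts, a fixed one-second transport, and first-come, first-served loading; the function names are hypothetical.

```python
LATENCY_S = 1.0  # assumed seconds per transport cycle


def arrival_times_s(red_shirts: int, pads: int, latency_s: float = LATENCY_S) -> list[float]:
    """When each Red Shirt materializes aboard, beaming up to `pads` per cycle."""
    # Red Shirt i (0-based) rides in cycle i // pads and arrives when that cycle ends.
    return [(i // pads + 1) * latency_s for i in range(red_shirts)]


def average_wait_s(red_shirts: int, pads: int, latency_s: float = LATENCY_S) -> float:
    """Mean time spent waiting on the planet before a Red Shirt's own transport starts."""
    arrivals = arrival_times_s(red_shirts, pads, latency_s)
    return sum(t - latency_s for t in arrivals) / red_shirts


for pads in (1, 10, 40):
    print(f"{pads:>2} pad(s): average wait {average_wait_s(40, pads):g} s, "
          f"transport itself {LATENCY_S:g} s")
```

The average wait falls from 19.5 seconds to 1.5 to zero, but the transport itself is one second in every case, which is exactly the distinction drawn above.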

The red shirt matter streams are over UDP.

Hiya, nice article, thanks. I recently came across dataaccelerator.com, which actually reduces network latency with a machine-learning program that learns queries and sends them all in one packet. What do you think about this?

Thanks for writing in. I’m not really familiar with dataaccelerator.com, and will have to take a look at it.

There are a few technologies on the market that are geared towards improving the I/O of a data stream. In storage, for instance, it isn’t uncommon for I/O to be “bundled” back to the host from a storage array or appliance in order to use network bandwidth more efficiently. This is the principle behind Jumbo Frames for iSCSI (though I, myself, am not a fan of using JF for iSCSI).
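The bandwidth argument for jumbo frames comes down to per-frame overhead: every frame pays the same fixed cost in headers, preamble, and inter-frame gap no matter how much payload it carries. Here is a back-of-the-envelope sketch, assuming untagged Ethernet, IPv4, and TCP without options, and ignoring iSCSI’s own PDU headers; the constant and function names are illustrative.

```python
# Fixed per-frame costs, in bytes (assumed: untagged Ethernet, IPv4, TCP without options).
PREAMBLE_SFD = 8
ETH_HEADER = 14
FCS = 4
INTER_FRAME_GAP = 12
IP_TCP_HEADERS = 20 + 20  # IPv4 + TCP headers, counted inside the MTU


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of wire time carrying TCP payload for full-size frames at this MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_HEADER + FCS + PREAMBLE_SFD + INTER_FRAME_GAP
    return payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {tcp_payload_efficiency(mtu):.1%} of the link carries payload")
```

Under these assumptions the gain is a few percentage points of throughput (roughly 94.9% versus 99.1%). That is more Red Shirts per cycle, not a faster beam: no individual frame arrives any sooner.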

There are a couple of SDN technologies that keep a finger on the pulse of the I/O in the system. I know enough about Cisco’s ACI to be dangerous, but I believe that’s one such technology. I think Big Switch has something similar in their controller architecture too, but I can’t remember 100% (I could be wrong).

The key thing to keep in mind is that network latency itself cannot be arbitrarily reduced. Latency can be added to a network, but not eliminated (because it’s directly tied to hardware capabilities). What this means is that you want to think about how long it takes to get to the last bit, not the first bit. Applications don’t actually care how quickly the first bit comes back; they only care when the last bit arrives, so that they can process that data. To that end, mechanisms that reduce the time to the last bit (as dataaccelerator seems to do) would be a good thing, yes?
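A simple way to model “time to the last bit” is a fixed latency plus the time to serialize the data onto the link. The sketch below compares 1G and 40G under a hypothetical 50-microsecond latency that is the same on both links, and it deliberately ignores queuing, protocol overhead, and TCP windowing; the function name is illustrative.

```python
def time_to_last_bit_s(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """Seconds until the last bit arrives: fixed latency plus serialization time."""
    return latency_s + size_bytes * 8 / bandwidth_bps


LATENCY_S = 50e-6  # hypothetical 50 microsecond latency, identical on both links
for size in (4 * 1024, 1024 ** 3):  # a 4 KiB I/O and a 1 GiB transfer
    t1 = time_to_last_bit_s(size, 1e9, LATENCY_S)
    t40 = time_to_last_bit_s(size, 40e9, LATENCY_S)
    print(f"{size:>10} bytes: 1G {t1 * 1e3:.3f} ms, 40G {t40 * 1e3:.3f} ms")
```

For the small I/O the fixed latency dominates, so 40G only trims the total from about 83 to about 51 microseconds and can never go below the 50-microsecond floor. For the large transfer, serialization dominates and 40G cuts roughly 8.6 seconds to about 0.21 seconds, which is the “under heavy enough loads” case from the comment above.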

The question then becomes: at what cost? Do we need jumbo frames or some other aggregation technology? Are there oversubscription requirements? What architectural best practices apply? For example, do we need ECMP to make this truly work, and if I have a Clos architecture, is it even necessary? Those kinds of things.
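ECMP is a place where the transporter picture shows up again. A typical ECMP setup hashes each flow’s header fields onto one of several equal-cost paths, so adding paths adds aggregate capacity for many flows, while any single flow still rides one path. This is a minimal sketch assuming four equal-cost uplinks; SHA-256 is a stand-in for whatever hash a real switch uses, and the flow tuples and `ecmp_path` name are hypothetical.

```python
import hashlib
from collections import Counter


def ecmp_path(flow: tuple, num_paths: int) -> int:
    """Pick one of `num_paths` equal-cost paths by hashing the flow's 5-tuple."""
    # Stand-in hash: real switches use vendor-specific hardware hash functions.
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return int.from_bytes(digest[:4], "big") % num_paths


# Hypothetical iSCSI flows: (src IP, dst IP, protocol, src port, dst port).
flows = [("10.0.0.1", "10.0.1.1", "tcp", 49152 + i, 3260) for i in range(1000)]
placement = Counter(ecmp_path(f, 4) for f in flows)
print(sorted(placement.items()))  # many flows spread roughly evenly over 4 paths

# One flow always hashes to the same path, so it never uses more than one link.
print(ecmp_path(flows[0], 4) == ecmp_path(flows[0], 4))
```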

Of course, I’m just rattling off things that come to mind without having seen this particular product at all, so take it with a huge salt lick. 🙂