In the first article, I started talking about how to use the Synology to solve my various backup problems. I’m a bit picky about how I like my data organized, and as usual your mileage will vary, but because of the nature of my setup I need to use a variety of the tools available within the Synology ecosystem.

In a nutshell, I wanted a backup methodology that ensures revisions, on-site copies for fast restoration, and encrypted off-site backups for “last-resort storage.”

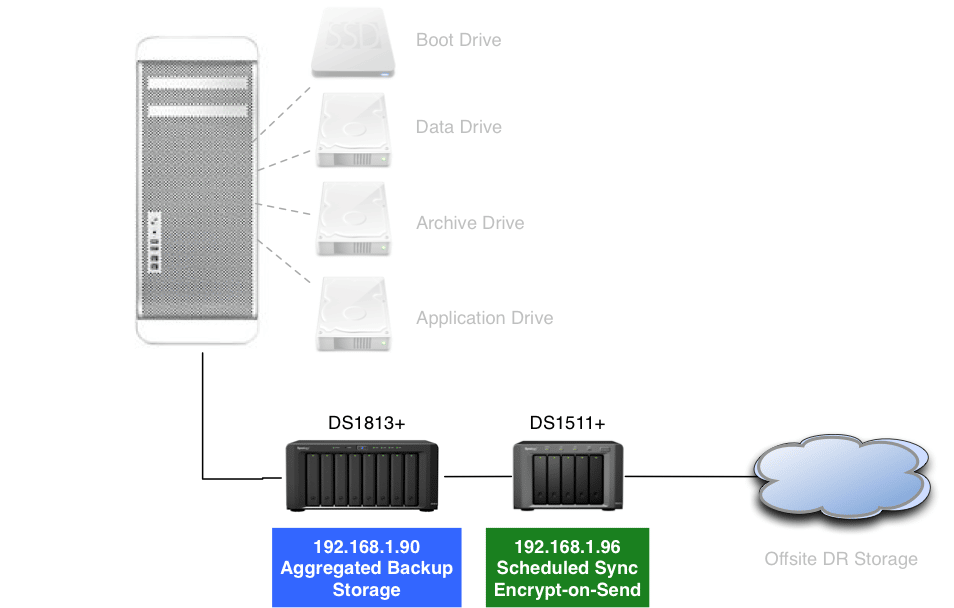

Let’s take a look at the first stage of this process – getting the files from the Mac to the DS1813+.

Equipment

For the purposes here, I have two separate Synology devices. The newer, faster unit is the one I’ve decided to use for my aggregation/syncing device. Not only am I using the device to mirror my Mac’s internal drives, but it is also acting as my media server, running Plex, and feeding my own, personal entertainment library, plus home videos, my amateur photography and audio projects, etc.

So, the 1813+ is not a good candidate as a backup location (best practice is to make sure that your backup device is only used as a backup device, and this definitely is not).

It is, however, a good first stage for collating all the data that exists on a number of volumes on the Mac, which I can then turn around and back up (not sync) to the DS1511+.

Both machines are running the latest version of DSM 5.2 (DSM 5.2-5592 Update 3), as of this writing.

Before We Get Started

It’s important to note, and I’m pretty sure if you’re reading this you already know it, but I gotta say it loud and clear: your mileage will vary. My setup configurations are based upon my own personal requirements, my workflow, and how I want to access my data when something goes wrong (there’s no “if” here; I know it’s going to happen at some point!). Doing backups is all about the restore, too, which means that I need to make sure I know how to get my data back as quickly and accurately as possible in tense, time-sensitive, and high-pressure emotional moments.

To that end, the up-front work I’m doing (while I’m calm and collected) is supposed to help me have multiple opportunities to recover lost data in the easiest possible fashion (when I’m stressed and frazzled).

Disclaimer: The information here is for example and informational purposes only. As we’re talking about data loss, use at your own risk. I accept no liability whatsoever for your data, just like no one else does for mine. All these methods have been tested and worked on my system, not yours.

So, there are a number of tools in Synology’s toolbox that are not appropriate for my specific needs, and as a result I’m not going to cover them all in this blog series. If you follow my setup to the letter, you’ll be locked into my workflow, and you’ll also inherit any errors I make along the way. 😉

Before we start messing around with the interface, it’s important to keep a couple of things in mind. The most important thing of all – and I cannot stress this enough – is always know where you are. Look at the diagram of my setup above, and think about all the different touch-points there are to keep track of:

- Mac: Boot Drive Source

- Mac: Data Drive Source

- Mac: Archive Drive Source

- Mac: Application Drive Source

- Synology DS1813+: Destination for Mac

- Synology DS1813+: Source for Synology DS1511+

- Synology DS1813+: Source for miscellaneous data sources

- Synology DS1511+: Destination for Synology DS1813+

- Synology DS1511+: Destination for Aggregated Mac Drives

- Synology DS1511+: Source for Cloud Storage

- Cloud Storage location: Destination for Synology

Why do I break it down this way? Because as you’ll soon see, when we start playing around in the Synology DSM GUI, we’re going to be playing multiple roles to get the job done, often using the exact same tools for each of them. Worse, out of the box DSM looks exactly the same on both devices, and you’ll be flipping back and forth between them. Often.

To add to that, there are rules and limitations depending upon whether you’re the source or the destination for things like user permissions, shared folders, encryption, etc.

For example, I have two users on both systems, admin and backup, one for each of those roles. So, when I’m asked for my username and password, it helps to know which account I’m using to log in for backup and/or synchronization permissions!

Do what you can to make your life easier. For me, I changed the background on my DiskStations so that I have a visual cue for which one I’m working on, and as I’m using a dual-monitor setup I always keep all the work done on the DS1511+ on the left monitor, and the DS1813+ on the right.

For the purposes of this article, we’ll only be working on one DiskStation (the 1813+), but in the next post it will be far more critical to know which device we’re working with.

I also use a more detailed version of the graphic above to help me understand the relationships between individual folder matches from device to device.
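If a graphic isn't handy, the same relationships can be written down as plain data. The following is a minimal sketch, not anything DSM provides: the `Link` class, the `roles` helper, and the `method` labels are hypothetical names chosen for illustration, and the hops are taken from the touch-point list above (the "miscellaneous data sources" entry is left out because its direction isn't spelled out).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One hop in the backup chain (hypothetical helper, not a DSM concept)."""
    source: str
    destination: str
    method: str  # shorthand label for how data moves on this hop


# Hops from the touch-point list; the method labels are illustrative shorthand.
LINKS = [
    Link("Mac: Boot drive", "DS1813+", "sync"),
    Link("Mac: Data drive", "DS1813+", "sync"),
    Link("Mac: Archive drive", "DS1813+", "sync"),
    Link("Mac: Application drive", "DS1813+", "sync"),
    Link("DS1813+", "DS1511+", "backup"),
    Link("DS1511+", "Cloud storage", "encrypted off-site backup"),
]


def roles(device: str) -> set[str]:
    """Return the roles ("source", "destination") a device plays in LINKS."""
    found = set()
    for link in LINKS:
        if link.source == device:
            found.add("source")
        if link.destination == device:
            found.add("destination")
    return found


for device in ("DS1813+", "DS1511+", "Cloud storage"):
    print(device, sorted(roles(device)))
```

Both DiskStations come back as source and destination at once, which is exactly why it's so easy to lose track of which hat a given device is wearing.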

Methodology and Workflow

My natural assumption was that I should start with the data that I have, and try to figure out ways to protect it. After all, that makes the most sense – and I have a feeling that most people probably do the same thing (though this is probably one of the few times I’ll ever compare my thought processes to most people!).

However, it turns out in this case that really wasn’t the best way to do it. Sure, when you set up the policies, you’ll do it from the source to the destination (well, actually, it’s iterative, and you go back and forth between each device), but you plan from the end point backwards.

There are a few reasons for this.

First, what you eventually wind up with for a resting place for your offsite/long-term/last resort storage will dictate how you get data to it. Now, having said that out loud, it makes a great deal of sense and seems self-evident, but when you think from the source forward, you start to realize that the methods and policies you put in place (compression, encryption, versioning, etc.) may not be compatible at all with your chosen solution end-to-end.

In my example, I had originally wanted a syncing process to start with for the 1813, and then put some sort of versioning on the 1511 (the one closest to the cloud storage link). Only then did I find out limitations about not only encryption, but also versioning, and my choices severely limited my choice of cloud providers (and, as luck would have it, limited it only to the most expensive options!).

It was only when I started to look at the problem backwards that a more coherent process started to emerge (special hat tip to Franklin Hua of Synology who guided me towards this way of thinking!).

Don’t fool yourself: cost is a serious concern. Conventional wisdom dictates that “you get what you pay for,” and to a large extent this is true. We’ll go over this in a later article in detail, but if you’re not careful you can wind up paying a lot more money than necessary for the wrong solution because of your workflow.

You could use free tools, after all. I could set up an rsync server on my Mac, and I have been using rsync as my manual copying/backup method thus far. However, when handling large numbers of files I’ve found that rsync can be somewhat flaky on my system, which doesn’t lend itself too well to unattended backups.

Instead, Synology’s DSM 5.2 offers a pretty good suite of tools to choose from. The trick, of course, is figuring out which tools do what I want them to do. While the DSM help files are good about explaining certain check boxes, radio buttons, and tab interfaces, they do nothing to explain the relationship between all the different options, nor why you would want to choose one option over another.

That onus is on the user (i.e., me).

Getting Data to the DiskStation DS1813+

I decided to use Synology’s Cloud Station sync to move the files from the Mac’s internal volumes to the Diskstation’s shared folder setup, setting up a versioning policy of 5 maximum versions.
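To make "5 maximum versions" concrete, here is a minimal sketch of a keep-the-last-N retention policy. It only illustrates the idea; it makes no claim about how Cloud Station stores or prunes versions internally, and it assumes the oldest version is the one discarded once the limit is reached. `VersionHistory` is a hypothetical name.

```python
from collections import deque

MAX_VERSIONS = 5  # the policy chosen in this article


class VersionHistory:
    """Keeps only the most recent versions of one file (illustrative only)."""

    def __init__(self, max_versions: int = MAX_VERSIONS) -> None:
        # A bounded deque silently drops the oldest entry once it is full.
        self._versions = deque(maxlen=max_versions)

    def save(self, content: bytes) -> None:
        """Record a new version of the file."""
        self._versions.append(content)

    def versions(self) -> list:
        """Return the retained versions, oldest first."""
        return list(self._versions)


history = VersionHistory()
for n in range(1, 8):  # save seven versions of the same file
    history.save(f"draft {n}".encode())

print(history.versions())  # drafts 3 through 7 remain; 1 and 2 are gone
```

The practical consequence is that a file saved very frequently can burn through its five versions quickly, so the cap limits how far back a restore can reach.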

Note: in my research I did come across known issues with Cloud Station sync for Windows users, which seem to be related to a combination of hard drive hibernation and multiple device access/modification of files. As I’m using this as an effective one-way replication, however, I decided to move forward with Cloud Station as the solution to start with.

More to the point, Synology has told me that the developers are aware of the problem, have addressed several of the issues in releases since the initial post, and are working on resolving the remaining issues for Windows users in an upcoming release. So, I think it’s worth updating the record to reflect the new state of stability.

Originally I had intended to encrypt this backup, but for reasons that will be explained below, it turns out that this messed up the workflow considerably. Cloud Station sync is a 2-way process and I’ll only be using it 1-way, but the alternative, the Shared Folder Sync process in the Backup & Replication tool, does not give me the versioning that I want. Somewhere along the line, I want to be able to recover accidentally deleted files, and if I can’t do that on the DS1511+ due to encryption limitations (again, see below), this is where I need to do it.

These are the steps (and mistakes) I made in order to get it working.

Setup

Synology has put together a great tutorial for setting up Cloud Station client (both Mac and PC), and I’m not going to duplicate it here. It’s a short 3 minute video that will take you less time to watch than to read here, and certainly far less time for me to paste the link than to recreate the steps. 😛

[youtube://www.youtube.com/watch?v=kZX8XK9Wy2Y]

I am, however, going to go into some detail that the video and the help files do not cover.

Cloud Station sync

Once the Cloud Station was set up (following the directions in the video above), it was now time to connect the dots, so to speak.

I always think it’s good to start small, and of the choices my Data Drive was the smallest volume that I have:

So, I know what I want to synchronize, but the question becomes: where do I put it on the Synology?

The problem here is that the default setup is to place all the data in my synchronization folder into the Diskstation:<user>/homes location, which is not what I want. Using the backup account, that would mean that, by default, all of my backup files would be thrown into the directory DiskStation:backup/homes/CloudStation, like some child throwing all his toys willy-nilly into his toybox:

This would not help me or my logical workflow, as I want to be able to quickly see the progress of different backup tasks and/or (more importantly) quickly go to locations for restoration/recovery purposes.

I already have folders on my DS1813+ that I’ve been using to manually rsync my files, and I don’t want to create another copy of this data on the Diskstation (that, ultimately, defeats the purpose).

Instead, I want to create a shared folder on the DiskStation that I can tie together with the Cloud Station client software. Sadly, this does not appear to be possible, so it looks like I’m not going to be able to get what I want. Still, I don’t want the backup to go into the homes folder, as that isn’t intuitive to me.

Instead, I went into the DSM File Station utility, and created a new Shared Folder:

In the creation dialogue window, I filled in the appropriate fields (spaces are not allowed in the Name field), and chose to encrypt the folder (which, as it turns out, is not what I wanted to do at this point – more on this in a moment):

You’ll notice that I didn’t bother to worry about the Windows-centric check-boxes, as they’re only applicable if you’re using the Windows Sharing protocol. Even though “Recycle Bin” is a common Windows term, in this case Synology creates its own location for deleted files before they are actually removed from the system using the same name, and so it is not solely specific for Windows users.

This is about as close as you can get to recovering accidentally deleted files using this method that I’ve found. More information can be found in the help file about these settings.

One of the other reasons to choose the recycle bin option was that it creates an actual, visible folder at the beginning of the directory, so in case I’m looking at the two directories I can see at a glance which one is which so that I don’t accidentally mess with my original folder.

Note: I’ve found this #recycle element very useful. Because I’m trying to get mirrored copies of the data in multiple locations, there has been more than one occasion when I’ve found myself trying to remember which one was which. Especially when you have limited capacity and you want to remove testing attempts or badly configured folders, you want to make sure you’re in the right place (“always know where you are!”) before you start deleting files! Seeing the #recycle folder always lets me know that this is the backup/sync’d copy. If I don’t see it, I should not delete it.
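The same rule of thumb can be turned into a guard for any cleanup script run against a mounted share. This is a minimal sketch under the assumption that the share is mounted on the Mac and that the `#recycle` folder sits at the top level of the synced copy, as described above; the function names are hypothetical and nothing here is part of DSM.

```python
from pathlib import Path

RECYCLE_MARKER = "#recycle"  # folder DSM creates when the Recycle Bin option is on


def looks_like_backup_copy(folder: Path) -> bool:
    """True if the folder contains a top-level #recycle directory."""
    return (folder / RECYCLE_MARKER).is_dir()


def require_backup_copy(folder: Path) -> None:
    """Raise before any destructive step if the marker is missing."""
    if not looks_like_backup_copy(folder):
        raise ValueError(
            f"{folder} has no {RECYCLE_MARKER} folder; "
            "this may be the original, so refusing to touch it"
        )
```

Calling `require_backup_copy(...)` as the first line of a cleanup routine means a mistyped path fails loudly instead of deleting the original. The check is only as good as the convention behind it: it says nothing about folders created without the recycle bin option.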

Choosing the encryption option flags a warning message:

In my case, I qualify for all of these criteria (so I thought), and I clicked on “Yes.” As it turns out… not so much…

ahem. Presenting…

Some Notes on Encrypting Backups with Synology:

To my surprise, when the folder was completed a file called “Data_Volume.key” immediately downloaded to my Mac. I say “surprise” because there is nothing in the help file about this new document. It turns out that this is an encrypted file which is used for mounting the shared folder.

More to the point, Shared Folders can be mounted via this key file or via the pass phrase that was used when the folder was created. Admins can enable the “auto-mounting” of the encrypted folder for convenience (this way they don’t have to log into the DSM to mount the encrypted folder), though Synology does not recommend this.

Let me take a quick moment here and point out some caveats about encrypting shared folders:

- You cannot undo this

- You cannot encrypt a shared folder that’s already been created, either

- You must have them mounted in order to sync to them

- You cannot access an encrypted shared folder via NFS

- You can back up encrypted or unencrypted folders to an unencrypted folder

- You cannot back up an encrypted folder to an encrypted folder

- You can back up an unencrypted folder to an encrypted folder

- Encrypted folders have to be mounted each time DSM boots up, so keep your .key data file or passcode handy

- Cloud Sync cannot read encrypted folders

- You cannot do “double encryption.” That is, you cannot have an encrypted folder then sent in an encrypted format (i.e., encryption-in-flight)

Now in the last case, it’s not something that you really want to do anyway. Yes, it’s technically feasible, and certain models have the horsepower to do it, but the cost-performance benefit really isn’t worth it. You’d be hitting the CPU so hard as to practically make it unusable, so you’re wasting time, processing time, power, etc. It’s just not worth it.
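The three backup bullets in the list above boil down to a single rule: the only refused combination is encrypted-to-encrypted. Here is a minimal sketch that restates those caveats as code. It simply encodes the list as written for DSM 5.2; it is not a Synology API, and both function names are hypothetical.

```python
def can_back_up(source_encrypted: bool, destination_encrypted: bool) -> bool:
    """Per the caveats above, only encrypted -> encrypted is refused."""
    return not (source_encrypted and destination_encrypted)


def can_sync_to(destination_encrypted: bool, mounted: bool) -> bool:
    """An encrypted shared folder must be mounted before it can be a sync target."""
    return mounted or not destination_encrypted


# Print the full backup compatibility matrix.
for src in (False, True):
    for dst in (False, True):
        label = lambda enc: "encrypted" if enc else "unencrypted"
        verdict = "ok" if can_back_up(src, dst) else "not allowed"
        print(f"{label(src):>11} -> {label(dst):<11} : {verdict}")
```

Writing it out this way makes it easier to check a planned chain of devices hop by hop before creating any folders, since encrypting a shared folder cannot be undone.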

But, here is the kicker that I didn’t realize when I set this up: Versioning and Encryption are mutually incompatible.

In my case, there were two options for handling encrypted backups:

a) Store the data on the DS1511+ using the shared folder encryption process, and upload that data to a cloud storage provider that can accept this type of data (which leaves only Amazon S3, Glacier, and Azure).

b) Use Cloud Sync to take the non-encrypted data, have Cloud Sync encrypt the files, and send them to the cloud storage provider (which opens up several other cloud storage options, such as Dropbox, Box, etc.).

Thing is, Option B does not allow versioned backups.

- You can get versioning without encryption

- You can get encryption, without versioning

In a nutshell, this mutual incompatibility became a workflow obstacle. Once the encryption is thrown into play, it’s not possible to maintain the versioning, and vice-versa. Because you can’t undo encrypting a shared folder, I had to go through this entire process from scratch.

Fortunately, I wasn’t completely screwed in my plan, but this was a setback, I confess.

Beyond the encryption process, the next step in the process is to allow the backup user to read and write to the folder:

If you eventually wish to delete this folder, you’ll need to have administrator privileges for that. The backup user in my setup does not have admin privileges, however, so for good measure I gave admin the checkmark as well for read/write, since I do all my deletion locally to the DiskStation.

At this point, all we’ve done is create a shared folder and make sure that the backup user can connect to it. In order to connect from the Mac, however, I need to make sure that the shared folder Data_Volume is enabled in the Cloud Station server interface:

I think of it this way: Even though I’m creating a shared folder on the DS1813+, the DiskStation doesn’t know who it’s going to be shared with until I tell it. This is the way of telling it that it’s going to be shared with something remote through the Cloud Station.

It’s a little hard to see, but on the left-hand pane in the window above I had to scroll down to get to the mounted Volumes directory and choose the Data drive. Essentially this is the same representation as what I can see in the Finder under the Devices drop-down menu, as in the image on the right.

As soon as you click Done, the client goes off to do the work. There is no summary page for you to peruse. So, away we go:

At this point the synchronization was already running. Immediately I could open up the Data drive in the Finder and see that items were marked for copying. According to Synology, there is supposed to be a blue circle for items in progress of copying, and another for items that have already been copied. However, there appears to be an issue with this reporting.

First, clicking the link in the Cloud Station client software (see the image above that shows the blue /Volumes/Data) opens a Finder window of the folder structure for the Data drive. In the Finder, performance is dog slow, especially while Cloud Station is syncing.

This is because of the way that the Cloud Station client interacts with the Finder to update all the different folder and file icons. Personally, I use Path Finder from Cocoatech as my Finder replacement, and the slowdown issues are non-existent there, as the Cloud Station client does not interact with it at all.

Second, the circles that indicate sync status (blue for in progress, green with checkmarks for completed) are simply incorrect. As you can see from the screenshots below, the Finder indicates that the sync process has been completed for several of the folders when, in Path Finder, you can see from the mounted Data_Volume drive that they have not.

As you can see in Path Finder, I’ve selected the Data_Volume backup directory and you can see that despite the fact that the icons above say everything is copied already, this is not the case:

Obviously, the only means by which to tell if this has happened is to open the files on the backup location (in this case, Data_Volume) and check to make sure that the file is intact. I attempted this with 5 different sync setups, of varying size and locations, and it appears to be universally true. It’s simply not a good idea to trust the Cloud Station client for correct status updates.
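Opening files by hand doesn't scale past a few spot checks, so one option is to compare the two trees with a script. The following is a minimal sketch, assuming the Data_Volume share is mounted on the Mac alongside the source volume; `find_unsynced` and `file_digest` are hypothetical helpers, not part of the Cloud Station client, and hashing every file over the network will be slow on large volumes.

```python
from __future__ import annotations

import hashlib
import os
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large files don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_unsynced(source_root: Path, backup_root: Path) -> list[str]:
    """List source files that are missing from, or differ in, the backup."""
    problems = []
    for dirpath, _dirnames, filenames in os.walk(source_root):
        for name in filenames:
            source_file = Path(dirpath) / name
            relative = source_file.relative_to(source_root)
            backup_file = backup_root / relative
            if not backup_file.is_file():
                problems.append(f"missing: {relative}")
            elif file_digest(source_file) != file_digest(backup_file):
                problems.append(f"differs: {relative}")
    return sorted(problems)
```

A call might look like `find_unsynced(Path("/Volumes/Data"), Path("/Volumes/Data_Volume"))`, where the second mount point is an assumption about where the share appears on the Mac. An empty list means every source file has an identical copy on the share; the sketch deliberately ignores extra files on the backup side, such as the #recycle folder.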

Fortunately, in the settings menu of the client application, you can turn those off:

Immediately when I deselected those options the Finder went back to its normal speed for listing items in the directory. I’ve been in contact with Synology about this and let them know that I’ve read some blogs online that have mentioned this as well, and they are looking into it.

Repeat As Necessary

All in all I have four internal volumes that need to be sync’d to the DS1813+. I follow the same steps for each of the volumes and I have up-to-the-minute copies of my main data now stored on my Diskstation, with a policy of a maximum of 5 versions.

Should I so choose, I can then make these folders available for use on other devices, such as my iPhone, iPad, or even other computers (such as my wife’s laptop) by connecting the devices to the Cloud Station. Those tasks, however, fall outside the scope of this article.

Deleting a Shared Folder

One of the things that I found frustrating was that there is no single, obvious location to delete a shared folder. Moreover, the help files don’t appear to have these instructions anywhere (I did look, though it’s possible I simply missed them). In any case, it’s not a simple button, but rather a series of steps you have to take.

Let’s say that I wanted to create a shared folder for my Archive volume, but then changed my mind (or, to put it more honestly, I accidentally placed the Data backup folder into the Archive backup folder).

Here, you can see that I have created a shared folder called Archive_Backup with a single folder residing inside (“Data Backup”):

Did I have to delete it? No. But this is an example of what happens when I try to do so. As you can see, if I select the Action menu, all of the options – including Delete – are greyed out:

I can, however, select the Data Backup folder and get the option to perform actions on it:

However, I’m still stymied when I try to delete the main folder. Instead of selecting “Delete,” I canceled, wondering if I was getting errors because the directory wasn’t empty. It turns out it was simply that I was trying to delete from the File Station (silly me), which was the wrong place.

The reason for this is that it is a Shared Folder, and the act of deleting must be done from the Shared Folder tab in the Control Panel, not the File Station menu.

Once you’re inside the Control Panel -> Shared Folder, you can delete the folder as normal.

Once I figured out that I needed to be in the right place (see, I told you so!), it went off without a hitch.

That little checkbox wasn’t necessary in my setup, because I wasn’t doing any fancy OS X or Windows ACLs. Don’t worry, if you don’t know what those are, you most likely aren’t using them either. However, if you find yourself unable to delete a shared folder and have scoured the interface to find that elusive checkbox, uncheck it, and you should be good to go.

This can be particularly frustrating because there are numerous places where your user permissions can be checked (e.g., Cloud Station settings, User settings, Shared Folder settings), but this little checkbox can hold you back like a sneaky little bastard.

Next Steps

So far, I’m a third of the way to my goal of a staged backup solution:

As you can see, we have not yet set up any of the Backup solutions – we’re still only at syncing and aggregating. In the next article, we’ll connect the Aggregated Backup storage DS1813+ to the actual Backup location, the DS1511+. After that, we’ll look at exploring cloud storage options for last-resort Disaster Recovery.

[Update 2015.08.26: Corrected errata about backing up encrypted folders. Corrected names of devices to their proper designation.]

[Disclosure: No payment was received for these articles. However, Synology provided me the Diskstation 1813+ (but not the drives), free of charge, for evaluation purposes. I also did get valuable help from Franklin Hua, Synology Sr. Technical Marketing Engineer, to whom I am especially grateful. Absolutely no editorial guidance was offered or solicited by Synology, other than to ask that I correctly identify their equipment. 🙂]

P.S. I’m very pleased that this has been useful, and I want to make more posts like this with updated hardware and software, so in order to do that I’ve recently created a Patreon account. If you want to see more posts like this – about Synology or anything else to do with storage – please consider sponsoring future content.

Comments


How did you get CloudStation to select the SSD drive for your pro? I cannot select the main drive as a volume. The other volumes of mine, work well in doing this; however, I cannot select the main drive of my pro. Any suggestions?

The Boot drive was the last on my list to set up, and I tried to do it after I wrote the blog. However, I ran into the same problem you did. It’s not possible to back up the system folder from the boot drive, apparently, though I was able to select everything else under the root (“/”) tree.

I wound up creating a Boot_Volume location on the DS1813+ and selecting my user home folder as a starting point. That’s not good enough, however, so I had to look elsewhere. I bought a copy of SuperDuper! and created a bootable backup image of the SSD and saved it to the Archive drive, which in turn got backed up to the DS1813+ (so, technically, I have two copies of the user home folder, but the CloudStation one allows me to have versioning).

I was pretty disappointed about it, but I did find a workaround at least. Hope that helped.

That’s great. I’ll do the same, though I use Carbon Copy Cloner, which does something similar to SuperDuper!. I already keep a bootable clone of the main drive, so I’ll back it up to the NAS as well.

I really liked your article, as it gave me some insight as to how to protect my data. I’ve had NAS backups via CCC and a BackBlaze offsite copy… but after reading your articles, I’m going to switch to using CloudStation along with versioning, CCC backups, and BackBlaze. Using CloudStation instead of solely CCC will simplify this and provide more timely backups.

I see talk and some implementations of BTRFS with some high-end Synology NASs…. I saw highlights of DSM 6.0 and its use of BTRFS… it will be interesting to see how this works out and if they make it available for older models. That file system will bring in some interesting features that will be worthwhile in users backup plans.

I’m really glad that it appears the blog series has been useful. 🙂

Unfortunately I didn’t get to join the DSM 6.0 conversation this past weekend. I need to try to catch up!
