On June 13, I was a guest on an episode of The Hot Aisle Podcast entitled “Fibre Channel is Dead, Right?” with Brian Carpenter and Brent Piatti. It’s by far one of the most in-depth technical conversations I’ve ever had on a podcast, and I thoroughly enjoyed it. Brian had read an earlier blog post I wrote about The Grand Unification Storage Theory, and wanted to have a discussion about it.

While the title of the podcast hints at Fibre Channel, we spent a great deal of time talking about NVM Express and its implications for the storage industry. Specifically, we covered a lot of ground about the nature of storage and some of the competing forces that affect the decision-making process and prevent a one-size-fits-all solution.

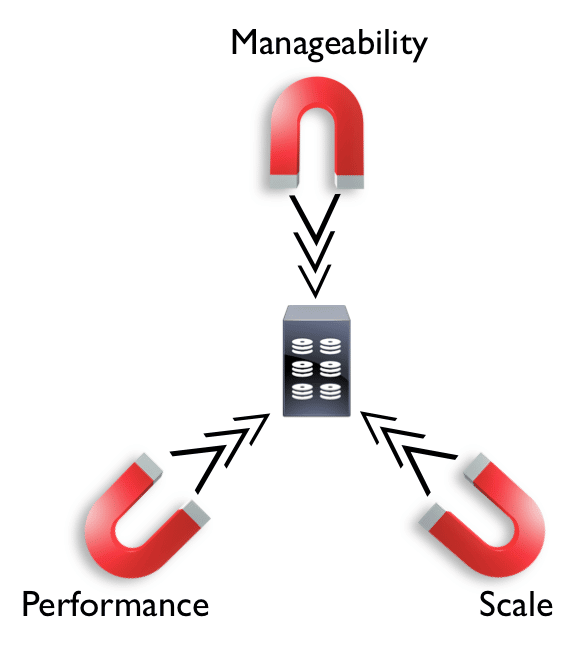

During the podcast, at around minute 13, I started talking about how there are major pressures in the storage world that actually pull people in opposite directions. It’s a bit difficult to verbally describe a visual, so I thought that I would augment the podcast with some pretty pictures here.

The Storage Triangle

I’ll be the first to say that none of this is particularly new or earth shattering, but if you are familiar with the storage industry (data centers, service providers, hyper-converged, etc.) you will notice some pretty common themes – everyone has the solution you need.

However, it’s not quite that simple.

Everyone who works in storage (or consumes storage, or analyzes storage, etc.) typically understands – even intuitively – that it is a very big, complex world in which they live. People outside of storage see it as this monolithic block (and yes, this includes many people inside my own company).

That’s because when we look at storage from the outside, we only see a black box system that needs to meet our needs for whatever we’re running – whether that be our own personal NAS or Google’s exabyte-level storage needs – and as a result we look at it through a particular lens.

For example, whenever you talk to someone who says that “Big Data” is the greatest problem in storage that needs to be solved today, they’re really living in the part of that triangle on the lower right. Whenever you talk to someone who is into “Hyper-Converged,” they’re pulling at storage from the top. When you’re looking at Micron/Intel’s 3D XPoint and NVMe-based PCIe technologies, you’re on the lower left.

Each of these types of storage solutions is a big deal, and the questions that arise – as well as the problems that need to be solved – are often diametrically opposed to one another.

“Doing” Storage

When a person says that they “do storage,” it can mean a variety of things. Many people say that they “do storage” the way that I tell non-technical people that I “do computers.” It’s vague enough that I can give them an idea of what I do without them needing to understand Data Center architectures. Plus, it’s a good starting point to see how far their eyes roll back in their heads.

Vendors with storage products that “do storage,” on the other hand, take a similar shortcut. It matters a great deal who your audience is. If you talk to a server-based person, “doing storage” could mean direct-attached storage, high-performance devices that reside inside (or very, very close to) the server, or it could mean management constructs such as hyperconverged systems that are designed to be implemented and managed by server admins (as opposed to storage admins).

Talk to storage administrators, though, and “doing storage” often means the manageability of large, multi-million dollar setups of storage arrays that serve thousands of servers, with redundant capabilities up the wazoo.

Let’s not forget the storage network admins, for whom “doing storage” means getting storage from a server to one of those storage devices reliably and quickly. Whether you have a dedicated storage network or a shared storage network makes a big difference.

Thing is, there is a reason why large storage companies (such as EMC and NetApp) have many different kinds of products. These trade-offs among performance, scalability, and manageability (not to mention price, which I’m omitting here for the sake of simplicity) are inevitable, and these companies have known for years that there is no “one-size” approach.

The same goes for storage networks. Some networks are faster than others. Some are easier to manage, some are more flexible. Some are purpose built, others are off-the-shelf. The trick is to match the right storage network with the correct storage solution that answers the particular forces that are affecting your needs.

Two Out of Three

That matching isn’t trivial. Even assuming that you’ve solved that matching issue, there is still the problem with the forces themselves.

The best you can hope for out of this is to lasso two out of the three, and even that is very, very difficult. Most storage solutions that want to do their job very well focus on getting as close to one corner of that triangle as they can.

Now, you may be wondering where other types of storage architectures fall – the general purpose, “enterprise-level” storage solutions. In reality, my little triangle is not axiomatic – it’s simply a lens through which to think about storage forces. However, most enterprise storage solutions need to balance between these three forces according to whatever use cases dictate.

Ultimately, that’s the purpose of my original post. When an organization has unique needs, it is simply impossible for any one solution to solve every problem, because you will always need more: more capacity, more performance, more manageability, and so on.

Beware The Storage Rodeo

In the past couple of years the demographic for storage has been changing, and that’s not necessarily a bad thing. However, the new storage architects come from a very different world. This new blood comes from the world of development and operations (DevOps), server administration, and programming. They’ve benefited from Moore’s Law to the degree that almost any solution is “good enough,” and any solution that they’re familiar with will satisfy the bulk of their needs adequately.

Vendors, on the other hand, don’t have that luxury. I bristle whenever someone comes to me and says “you don’t need storage,” because their patented so-and-so-technology solves all the world’s problems. In reality, they’ve managed to identify one of the forces pulling on the triangle and are ignoring the other corners. How conveeeeeeenient.

This creates a nasty wakeup call for consumers of storage when the natural forces that aren’t covered by “so-and-so-technology” inevitably come into play. Large scale storage becomes unwieldy, so new management structures need to be put in place. Every time you add management structures, your performance takes a hit.

Or, look at it from the other direction – high performance, in-server storage makes workloads go much, much faster. Faster completion times mean more work per second and a huge cost savings, so you want more of it. But scaling high-performance storage without losing that performance is very, very hard. Doing so while not making it fragile is even harder.

Now think about this: that’s just the storage. What about the storage network? You know, that piece of technology that hooks everything together?

What happens when you’ve matched up your storage and network perfectly – only to find that the forces are causing you to be pulled in directions that no longer make your storage solution and the network align?

What do you do then?

This is the part that catches a lot of people out. They may find a solution that works right now, but changes over time affect not only their storage needs, but their ability to access that storage – a.k.a. the storage network. It’s not always about growth, either – sometimes you can get blocked by management obstacles or performance obstacles too.

I understand – probably as well as anybody can – the nature of storage protocol religious arguments. It’s very easy to get wrapped around the axle on the benefits of one protocol over another.

However, dismissing the discussion as “well, one is just as good as another” is equally misguided. If anyone tells you that the storage network (or its protocol) doesn’t matter, they either don’t understand what they’re talking about or they’re selling you something.

What The Future Holds

Every year we have improvements that address each of these different storage forces. This is true for storage devices just as it’s true for storage networks. As these technologies improve, they will cover more and more use cases.

However, there are also several use cases we’ve never been able to handle. Ultra-high performance persistent storage devices and networks (i.e., sub-microsecond latency) have been only a pipe dream. Conversely, globally addressable storage (e.g., “cloud” storage) has only in the past few years become reliable and fast enough to be usable. These trends will also continue.

The forces on storage are constant and ongoing, and so we’ll always have a need for the right tool for the job, which means that there will likely never be “one storage (protocol) to rule them all.”

Comments

Update: Today this website transferred to a new hosting company, but during the transition process a couple of comments came in that did not get transferred. I’m entering them here manually for the sake of completeness.

Problem with storage is in the name itself. For decades it’s been the entity that ‘stored’ your data in a vault. ‘Access’ to that data was secondary. Sort of like a traditional bank. You ‘bank’ your funds. ‘withdrawal’ was secondary.

The world has moved to API-driven code. Incredibly fast, actually – and encouraged in large part by AWS.

There is only room for data in two places in future – Object store that primarily ‘stored’.

And distributed data INSIDE SW stacks like HDFS and Databases and Cassandra et al.

No more self-conscious, central-depository-bank like storage any more.

Just data – as you need it – accessible via API – like credit-card/PayPal transaction.

Bankers not welcome!

Not sure why you think it’s not possible to get all 3 (manageability, performance, and scale). Time to think outside the box. I also think a major transition is moving up in abstraction: just as we moved from VMs to apps, we need to move from managing storage to managing data.

Check out iguaz.io, latency in the microseconds, capacity in the Petabytes, managed as a PaaS, with higher level APIs/services and data level security.

Yaron

@Som Sikdar

Hi Som,

Thanks for commenting. (Just a quick note – I’m in the middle of transferring the site to a new host, so if this comment doesn’t make it I’ll do my best to manually enter it in again once things settle down.)

I think you might not be surprised to find that I disagree with you. As I write above, I think that “fast enough APIs” are what happens when the rising tide of Moore’s Law lifts all boats. Don’t get me wrong: for certain deployments, you certainly have an argument to be considered. The Venn diagram that covers use cases has been expanding to embrace a larger number of possibilities. That is definitely a good thing.

But let’s take the other extreme – high-performance persistent memory. If I want to have ultra-high performance storage inside of a server directly connected via PCIe, do I want to insert a completely unnecessary object store in the middle to act as a Logical Block… what? Address? Mapping System? I don’t even know what such a thing would be called.

In your argument, you’ve lasso’d the top and right corners of the triangle and said that the performance corner is “good enough,” even if it’s not covered. Ultimately, I think it reinforces the point I was making.

Thanks again for taking the time to send in a comment!

@Yaron Haviv

Hi Yaron,

A quick point of clarification. I did not say you couldn’t get all 3. I said that tradeoffs necessitate that when forces pull on your storage solution (greater performance, greater scalability, greater management capabilities), something will inevitably have to give. When you couple that with a storage networking solution – with its own forces acting upon it – you will suffer from an inevitable gap somewhere in your system.

I have not heard of iguaz.io yet, so thank you for bringing that to my attention. I’m looking forward to seeing what is behind the hero numbers.

Thanks for responding!

@J Michel Metz

The trick is simple. Many apps choose between memory access and flash/disk at the app/API level. A better solution is to store and manipulate or search “Data Structures” (mapped to common API notions). They land in an ultra high-performance, resilient NV cache with atomic access (reduced IO overhead), and can be destaged to slower flash/disk based on usage or policy. So you have one API to use, automated tiering to handle capacity, and offloaded IO chatter (i.e., move app logic/serialization to the storage, buy one system vs. 2-3, and eliminate the issues of cache inconsistency and journaling). Having local PCI flash doesn’t solve much, since you still need to replicate over the network and have high FS/middleware overhead. It also contradicts the stateless and elastic notion of cloud-native apps.

If you store and index those data structures in a smart way, you can transform them on the fly to be read/written through different APIs (seen as files, objects, tables, message streams, ...) without involving data copies, and you can accelerate apps and make them scale by orders of magnitude (low-level disk performance is never the issue; the problem is IO serialization in the upper layers).

Doing a Data Object lookup is quite fast: we do it in 200ns (using Intel HW assist), plus deep classification in 300ns. Today networks are very fast. In HPC and FSI land, apps round-trip in 1-2 microseconds, but that requires low-latency tricks and OS bypass. NVM is ~1 microsecond, so do the math with 2 replicas: it is in most cases faster than the overhead introduced by the FS/middleware level (see: https://sdsblog.com/2015/11/19/wanted-a-faster-storage-stack/). Indeed, the kind of thing we do is reserved for a small group of crazy real-time geeks and sounds impossible.
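As a rough sketch of the “do the math” step above, the snippet below adds up the figures quoted in the comment (200ns lookup, 300ns classification, a 1-2 microsecond network round trip, ~1 microsecond NVM, 2 replicas). The simple additive model, the parallel-versus-serial replication option, and every name in the code are assumptions made for illustration; the comment does not say how the pieces combine.

```python
# Figures quoted in the comment, in nanoseconds.
LOOKUP_NS = 200                  # data object lookup
CLASSIFY_NS = 300                # deep classification
NVM_NS = 1_000                   # one NVM access (~1 microsecond)
NETWORK_RTT_NS = (1_000, 2_000)  # low-latency round trip, best and worst case


def write_latency_ns(rtt_ns: int, replicas: int = 2, parallel: bool = True) -> int:
    """Estimate one replicated write under a simple additive model (an assumption)."""
    per_replica = rtt_ns + NVM_NS
    # Parallel replication costs one replica's time; serial costs all of them.
    replication = per_replica if parallel else per_replica * replicas
    return LOOKUP_NS + CLASSIFY_NS + replication


for rtt in NETWORK_RTT_NS:
    print(f"rtt={rtt} ns: parallel={write_latency_ns(rtt)} ns, "
          f"serial={write_latency_ns(rtt, parallel=False)} ns")
```

Under these assumptions the estimate lands between 2.5 and 6.5 microseconds, depending on the round-trip time and on whether the two replicas are written in parallel or one after the other.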

But the key to addressing the 3rd angle, management, is to move from managing storage to managing data. This is what all the cloud providers are doing, just as we now switch from managing VMs to app containers. See my latest post: http://www.information-management.com/news/data-management/the-next-gen-digital-transformation-cloud-native-data-platforms-10029217-1.html

I agree with Som: the discussion needs to evolve toward looking at it through functional APIs and from a full-stack perspective, and APIs don’t have to be slow HTTP-based ones.

Yaron

Nice post! You’ve captured a part of why there is still a demand for a variety of storage products and we’re not all hosting everything on in-server flash. I agree that definitely there is a shift – and it’s hard for those of us who are younger to understand what the ‘bad old days’ were like, so accessible is data in our personal lives. The bar has been set higher for manageability and scalability (and the price has been set lower, of course!) and so we need to reset our baselines.

I haven’t listened to the podcast yet but it’s now on my list – thanks!

(Disclaimer – I work @ NetApp)
