This is a question that came in from Quora, and I thought it was worth replicating here.

I think it is right to presume that the questioner is referring to “Fibre Channel” when the question says “SAN.” iSCSI is, in fact, a SAN (Storage Area Network) technology.

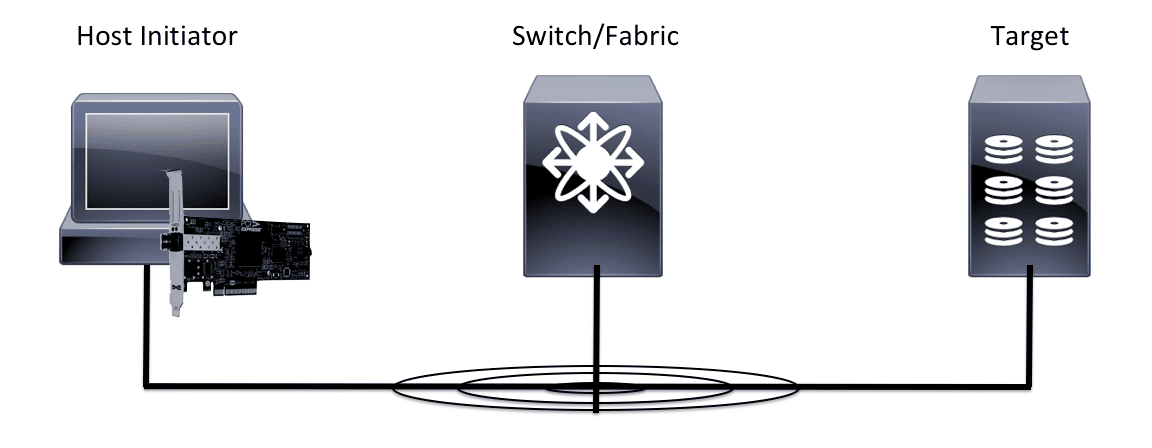

A SAN is designed with some common elements. This is true for both Fibre Channel (FC) and iSCSI. It’s also true for Fibre Channel over Ethernet (FCoE; though for simplicity’s sake I’m not going to talk about FCoE). It’s these design elements that make a SAN, a SAN.

What this means, then, is that differences in performance (what makes something faster) are often due to a few different elements:

- How fast is the wire (link speed)?

- How close to the wire is the protocol (storage stack)?

- What is the architecture of the network (network vs. fabric)?

Fortunately, the FCIA has a lot of presentations that cover most of these concepts.

I will, though, talk a little bit about it here.

How Fast is the Wire (link speed)?

For years, this has been the means by which people look at performance. After all, it’s really easy to do that – 10GbE vs. 16GFC. FC “wins,” right?

Or, more currently (putting speeds always dates posts like this): 100GbE vs. 64GFC. Ethernet “wins”. Or does it?

It turns out that the numbers that you see are not actually the numbers that go across the wire. 100GbE is not actually 100Gb. Instead, it’s 10x10GbE, or 4x25GbE, or 2x50GbE. 64GFC is one lane of 64GFC.

Except it’s not. 64GFC is a classification of Fibre Channel link speed. Its actual speed is 57.8Gbps.
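To make the lane arithmetic concrete, here is a minimal Python sketch. The `Link` class and the `payload_rate` helper are names made up for illustration. The lane breakdowns and the 57.8Gbps figure come from the text above, and the 10GbE numbers (10.3125 Gbaud on the wire with 64b/66b encoding) are the commonly cited values for that standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Hypothetical description of a link as a bundle of lanes."""
    name: str
    lanes: int
    gbps_per_lane: float

    @property
    def total_gbps(self) -> float:
        return self.lanes * self.gbps_per_lane


def payload_rate(line_rate_gbps: float, data_bits: int, coded_bits: int) -> float:
    """Rate left for data after line encoding (e.g. 64b/66b)."""
    return line_rate_gbps * data_bits / coded_bits


# Three ways to build "100GbE", as listed above.
hundred_gig = [
    Link("10x10GbE", 10, 10.0),
    Link("4x25GbE", 4, 25.0),
    Link("2x50GbE", 2, 50.0),
]
for link in hundred_gig:
    print(f"{link.name}: {link.lanes} lanes, {link.total_gbps:g} Gb total")

# 64GFC is a name, not a measurement: one lane at 57.8 Gbps.
gfc64 = Link("64GFC", 1, 57.8)
print(f"{gfc64.name}: {gfc64.total_gbps / 64:.1%} of its nominal 64")

# 10GbE signals at 10.3125 Gbaud; 64b/66b encoding leaves 10 Gbps for data.
print(f"10GbE payload rate: {payload_rate(10.3125, 64, 66):g} Gbps")
```

The same headline number can hide very different lane layouts, which is why comparing labels alone says little about how traffic actually moves.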

The point being, what happens underneath the numbers matters. It matters a great deal, because it represents not only how much data can be on the wire at the same time, but also how that data relates to each other.

Just like having 122,000 miles of string in 3″ lengths, chopping up your links can have profound impacts on performance and congestion, as well as overall utility. Having said all that, the capabilities of modern switches – both Ethernet and Fibre Channel – are more than satisfactory for most SAN traffic use cases.

That is, if you’re only pushing 4Gb of SAN traffic, the link speeds of Ethernet and Fibre Channel are mostly a wash.

How Close To The Wire (Protocol Stack)?

This leads us to an important question – if the link speed isn’t the most important thing, what is?

All protocols have a “stack.” It allows important changes to be made at a layer so that other layers aren’t affected. Both Ethernet and Fibre Channel (and others) have their own protocol layer stack.

This is Ethernet’s OSI Model that is familiar to most people who work in networks:

And this is the Fibre Channel model:

You’ll see that they both start at the bottom, with the physical interface. This is, after all, the most common component of all – the actual wire.

Here’s the general rule – the closer you are to the wire, and the “thinner” the layers are on top of it, the better performance you’re going to get.

But, here’s the tradeoff. The closer you are to the wire, the more rigid the architecture has to be (we’ll talk about architecture in a second). That is, the more unforgiving it is, and the more reliable and resilient the system has to be.

Reliability and resiliency are not cheap.

The question about these layers becomes very important when you start to think about how the SCSI layer – the actual storage communication protocol that sits on top of Ethernet or FC transports – connects into these models.

In Ethernet, SCSI operates at the very top of the stack. That is, Layer 7 – the Application layer. There are 6 layers to go through for each frame before it gets to the wire at the bottom.

In contrast, Fibre Channel is considerably more streamlined. Technically, there are four layers underneath SCSI before hitting the wire, though one of them (FC-3) is not often implemented. There is a tremendous efficiency here, which significantly helps reduce overhead for each message that is sent.
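The layer counts above can be written down as a small Python sketch. The list and function names are invented for illustration, and placing SCSI at FC-4 is an assumption made so that the count matches the "four layers underneath" described in the text.

```python
# OSI layers, bottom (wire) to top, as the text describes the Ethernet side.
OSI_STACK = ["Physical", "Data Link", "Network", "Transport",
             "Session", "Presentation", "Application"]

# Fibre Channel levels, bottom to top. Treating FC-4 as the level where SCSI
# is mapped is an assumption made to match the count in the text.
FC_STACK = ["FC-0", "FC-1", "FC-2", "FC-3", "FC-4"]


def layers_beneath(stack: list[str], layer: str) -> list[str]:
    """Return the layers a message crosses between `layer` and the wire."""
    return stack[:stack.index(layer)]


ethernet_path = layers_beneath(OSI_STACK, "Application")
fc_path = layers_beneath(FC_STACK, "FC-4")

# FC-3 is not often implemented, so the typical path is shorter still.
fc_path_typical = [level for level in fc_path if level != "FC-3"]

print(len(ethernet_path), ethernet_path)
print(len(fc_path), fc_path)
print(len(fc_path_typical), fc_path_typical)
```

Counting layers is only a rough proxy for overhead, but it shows why the FC path is described as the "thinner" one.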

[By the way, I’m using SCSI as the example here, since the question refers to iSCSI SANs. NVMe works the same way in Fibre Channel, but is a bit different on the Ethernet side. That’s best left for another answer.]

What is the Architecture of the Fabric (Fabric vs. Network)?

As I said, the closer to the wire you get in the protocol, the better performance you get. Unfortunately, the tradeoff is that you wind up with far more rigid architectures.

Fibre Channel uses one routing protocol – FSPF (Fabric Shortest Path First). And the design architectures are built around that protocol when it comes to moving data between devices. Ethernet, on the other hand, has dozens of routing protocols. The permutations of how packets get from one end of the network to the other (or not) are truly dizzying.
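As the name suggests, the core idea behind FSPF is picking the lowest-cost path between switches. The sketch below illustrates only that idea, using Dijkstra's algorithm as a stand-in. It is not the FSPF protocol itself, and the switch names and link costs are hypothetical.

```python
import heapq

# Hypothetical four-switch fabric: switch -> {neighbor: link cost}.
FABRIC = {
    "sw1": {"sw2": 1, "sw3": 4},
    "sw2": {"sw1": 1, "sw3": 1, "sw4": 5},
    "sw3": {"sw1": 4, "sw2": 1, "sw4": 1},
    "sw4": {"sw2": 5, "sw3": 1},
}


def shortest_path(fabric, source, target):
    """Dijkstra's algorithm: return (total cost, list of switches)."""
    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, link_cost in fabric[node].items():
            if neighbor not in visited:
                heapq.heappush(
                    queue, (cost + link_cost, neighbor, path + [neighbor])
                )
    raise ValueError(f"no path from {source} to {target}")


cost, path = shortest_path(FABRIC, "sw1", "sw4")
print(cost, " -> ".join(path))
```

With a single path-selection rule, every switch that sees the same topology arrives at the same answer, which is part of what makes the fabric's behavior predictable.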

There is a bit of an interesting situation that emerges as a result of this limitation. Because of this restricted nature of Fibre Channel, you also get far more control. When you have more control, you can have greater predictability in your traffic.

Fibre Channel works under a principle called Deterministic Networking. That is, every single piece of information is pre-determined before it ever starts. Every device in the network has a relationship with other devices, and that relationship is known and configured before it’s ever plugged in.

This is what makes FC a fabric. The entire network acts as a single unit, with every piece working in concert to move the data back and forth. The magic that makes this possible in FC is called a Name Server.

Now, this is probably one of the most important answers to the original question. Why does an FC SAN generally perform better than iSCSI? It’s because of this.

A Fibre Channel Name Server knows everything that goes on inside of an FC fabric. It’s a repository of information for every component that makes up the network. Less like a “big brain” and more like an orchestra conductor, it oversees everything, making the entire fabric work as a whole, and coordinating traffic movement.
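The "repository" idea can be sketched in a few lines of Python. This is a toy model, not how any real switch implements the Name Server: `ToyNameServer`, `Port`, the `role` field, and the WWPN values are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """Hypothetical record for one device port known to the fabric."""
    wwpn: str  # world wide port name (made-up values below)
    role: str  # "initiator" or "target"


class ToyNameServer:
    """In-memory stand-in for a fabric-wide directory of devices."""

    def __init__(self):
        self._ports = {}

    def register(self, port: Port) -> None:
        """Record a port when it joins the fabric."""
        self._ports[port.wwpn] = port

    def query(self, role: str) -> list[Port]:
        """Return every registered port with the given role."""
        return [p for p in self._ports.values() if p.role == role]


ns = ToyNameServer()
ns.register(Port("10:00:00:00:00:00:00:01", "initiator"))
ns.register(Port("50:00:00:00:00:00:00:0a", "target"))
ns.register(Port("50:00:00:00:00:00:00:0b", "target"))

# A host asks the directory who it can talk to instead of probing the network.
for target in ns.query("target"):
    print(target.wwpn)
```

The point of the sketch is the shape of the interaction: devices register once, and everyone else asks one well-known place rather than discovering peers on their own.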

Ethernet, on the other hand, is a Non-Deterministic network. It relies on best-effort delivery, and entire mechanisms are put in place to block paths (e.g., Spanning Tree) or attempt recovery (e.g., TCP) when things go wrong. In Ethernet, performance – especially under load – is highly unpredictable, and as a result Upper Layer Protocols (ULPs) are put in place to ensure that your traffic gets to where it is supposed to go… eventually.

Whenever you place storage traffic on top of that type of network, you may wind up with amazing hero numbers (100G, for instance), but consistency over time is something of a wild card. In Fibre Channel, however, that consistency makes scaling devices considerably easier, as the entire design is prepared in advance and deterministic.

Performance, especially storage performance, loves consistency.

Bottom Line

There is more to this, of course. This is just a short (by comparison with the standards, anyway!) explanation of what impacts performance in a generic SAN, and how Fibre Channel and Ethernet differ. More intermediate and advanced readers will complain that I didn’t talk about oversubscription, fan-out ratios, buffer-to-buffer credits, lossless, or any other of a host of different things that I omitted.

And they are right. I didn’t talk about them.

It doesn’t mean that they’re not there, or that they’re not important – they are. It just means that there’s far more to understanding how networks and fabrics affect performance than meets the eye.