What is the recommended size for hard drives used in a RAID 5 configuration?

This is an excellent question. I have a feeling that the ‘gut instinct’ answer may seem like “as large as you can get.” However, that is not the case.

For those unfamiliar with RAID, at a high level it’s a way of organizing data across more than one drive so that you can get added capacity with either better performance or better resiliency.

There is always a trade-off. RAID level 5 is a good compromise in getting better performance and resiliency while not eating up too much capacity and keeping risk relatively low. It requires a minimum of 3 drives.

Any time you have a tradeoff, you have a ‘sweet spot’ of effectiveness. With RAID 5, that sweet spot has to do with the right size (in terms of capacity) of the drives, the number of the drives, and some environmental factors that are too convoluted to go into at the moment. Suffice to say this - drives can get hot during operation, and you want to make sure you don’t stuff them in the bottom of a closet with no airflow.

RAID is all about risk management and avoiding data loss. Some RAID levels (like 0) are all risk and all data loss if things go wrong, but it’s the fastest level out there. So, if you have work that you want to do that requires a lot of speed but you don’t care about whether you lose the data, RAID 0 is okay. Video editing swap files, for instance, can fit into this category.

RAID 5, though, is usually something where you have files that you want to keep for a long time. So, that means that you’ll likely want to have a lot of capacity. That means there is a temptation to get very large drives (e.g., over 6TB in a home NAS) and several of them. Many home NAS devices allow you to have 6, 7, 8, or even 12 drives.

However, keep in mind that as you add more drives, you add more risk. With RAID 5, you can only afford to lose one drive. That means that if you have a RAID 5 with 12 drives and any two of those drives fail, the whole array is gone, including the data on the remaining 10. So, if a single drive does fail, you want to make sure that you can get a new one in there ASAP so that you don’t risk a second one dying on you and screwing up your whole day (and trust me, that’s a very bad day).

This is where the tradeoff comes in.

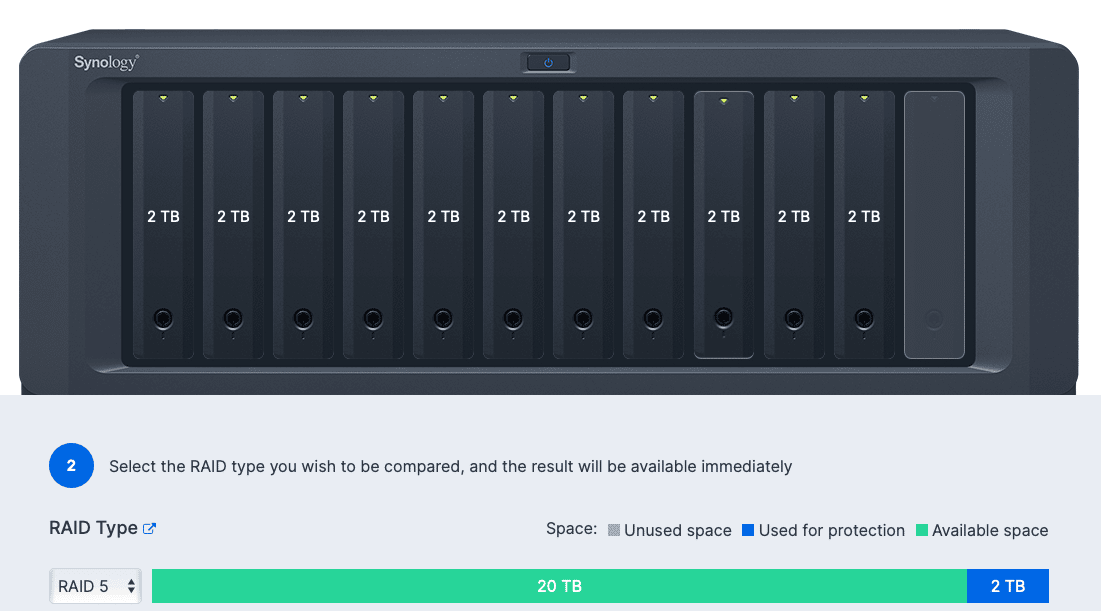

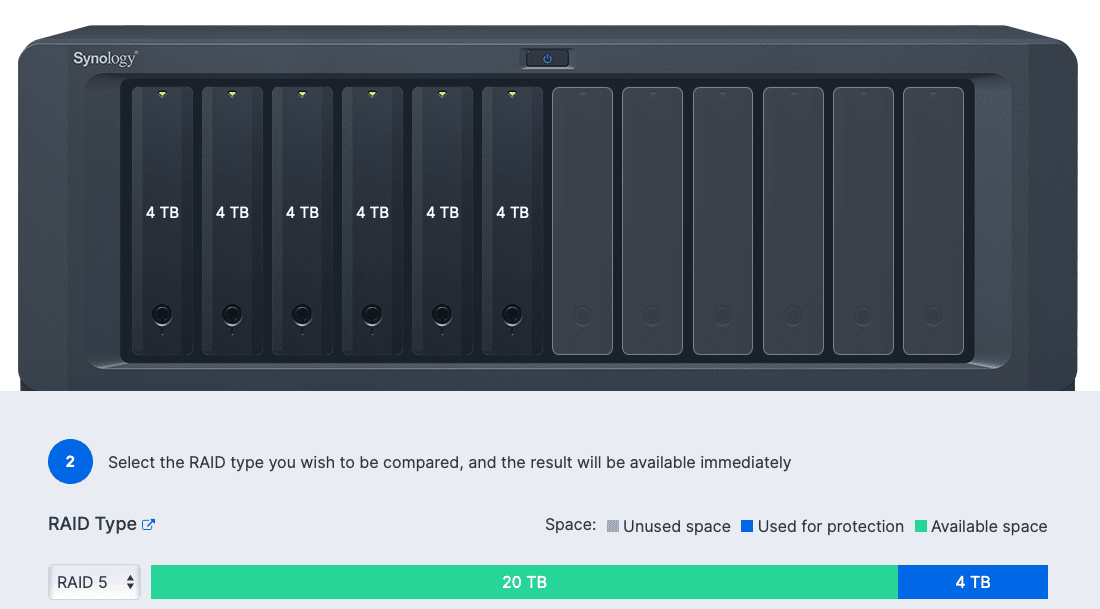

Let’s say you need a 20TB volume (I’m trying to keep the numbers simple). If you have 2TB drives, that means you’ll need 11 drives: you have to fit 20TB of capacity on enough drives to still have one that can die without you losing data. 11 x 2TB = 22TB, minus one 2TB drive’s worth of capacity for protection = 20TB.

The Synology RAID calculator makes a great visual for this:

Okay, so now the risk of losing a drive has gone up considerably. The chance that at least one drive fails goes up the more drives you have. If you had 100 drives, for instance, the chance of one of them failing would be a lot higher than with just 5 drives. It’s simple probability: more drives means more chances for a failure.
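To put rough numbers on that, here is a minimal sketch. It assumes every drive fails independently with the same annual failure rate, and the 2% figure is a made-up illustration, not a measured value for any particular drive. The function name `p_any_failure` is my own.

```python
def p_any_failure(drive_count: int, annual_failure_rate: float) -> float:
    """Chance that at least one of `drive_count` drives fails within a year.

    Assumes independent failures with an identical rate per drive,
    which is a simplification (real failures are often correlated).
    """
    # Probability that every drive survives, subtracted from 1.
    return 1 - (1 - annual_failure_rate) ** drive_count


if __name__ == "__main__":
    HYPOTHETICAL_AFR = 0.02  # assumed 2% per drive per year, for illustration only
    for n in (5, 11, 100):
        print(f"{n:>3} drives: {p_any_failure(n, HYPOTHETICAL_AFR):.1%}")
```

The exact percentages depend entirely on the failure rate you plug in, but the shape is always the same: the number climbs as the drive count climbs.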

So, let’s say you reduce the number of drives but increase the capacity. Instead of 2TB drives, you now use 4TB drives. Now, instead of 11 drives, you only need 6 to get to your 20TB capacity number. Good, you’ve reduced your exposure on drive count, you’re still at 20TB, and if you get a good deal on 4TB drives then you’ve even saved money on the $/GB cost.
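The drive-count arithmetic for both cases can be sketched in a few lines. This assumes the usual RAID 5 rule that one drive's worth of capacity goes to parity and ignores filesystem overhead; the function names are my own, not from any RAID tool.

```python
import math


def raid5_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Usable capacity of a RAID 5 set: one drive's worth goes to parity."""
    if drive_count < 3:
        raise ValueError("RAID 5 requires at least 3 drives")
    return (drive_count - 1) * drive_tb


def raid5_drives_needed(usable_tb: float, drive_tb: float) -> int:
    """Smallest RAID 5 drive count that provides at least `usable_tb`."""
    data_drives = math.ceil(usable_tb / drive_tb)
    # One extra drive for parity, and never fewer than the 3-drive minimum.
    return max(3, data_drives + 1)


if __name__ == "__main__":
    for size_tb in (2, 4):
        n = raid5_drives_needed(20, size_tb)
        print(f"{size_tb}TB drives: {n} needed, {raid5_usable_tb(n, size_tb)}TB usable")
```

For a 20TB target this gives 11 drives at 2TB and 6 drives at 4TB, matching the numbers above.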

But what happens when you lose a drive? This is where it gets tricky (and painful).

When you lose a drive in a RAID set and put in a new one, the process for getting that new drive up to speed and part of the RAID is called rebuilding. (Note: You may hear this referred to as ‘resilvering.’ Resilvering is supposed to refer to fixing a RAID 1 mirror set, the way you would resilver a mirror to get it reflective again. Fixing any other RAID set is called “rebuilding,” but this is a minor nit and as long as you understand what is going on, it doesn’t really matter what you call it 🙂 ).

For 4TB drives - like the ones we’re talking about here - that can (and often does) take weeks.

Weeks. Not hours. Not days. Weeks.

Oh, and at the time of this writing, you can buy 22TB drives. Imagine how long those will take to rebuild.
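How long a rebuild actually takes depends heavily on the effective rebuild rate, which can sit far below a drive's rated sequential speed when the NAS is busy serving files or throttles the rebuild. Here is a rough sketch of the estimate. The rates below are hypothetical placeholders, and the function name `rebuild_hours` is my own; measure your own system before trusting any number.

```python
def rebuild_hours(drive_tb: float, effective_mb_per_s: float) -> float:
    """Rough time to rewrite one full drive at a sustained effective rate.

    Uses decimal units (1 TB = 1e12 bytes, 1 MB = 1e6 bytes), as drive
    vendors do. Ignores controller overhead and retries.
    """
    if effective_mb_per_s <= 0:
        raise ValueError("effective rate must be positive")
    seconds = (drive_tb * 1e12) / (effective_mb_per_s * 1e6)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical effective rates: an idle array vs. a heavily loaded one.
    for rate in (100, 5):
        for size_tb in (4, 22):
            days = rebuild_hours(size_tb, rate) / 24
            print(f"{size_tb}TB at {rate} MB/s: about {days:.1f} days")
```

Whatever rate you assume, the time scales linearly with drive size, so a 22TB drive takes 5.5 times as long as a 4TB drive under the same conditions.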

This is more than just an annoyance; this is a huge problem for the risk of data loss. Remember: the conditions under which one drive failed are the same conditions the other drives are experiencing. If you didn’t follow my advice above and kept your NAS under your laundry in the closet, there’s a good chance that the reason why the first drive failed is also going to affect your other drives.

That means that for the entire time your RAID set is rebuilding (weeks!) you are at risk of losing everything if another drive fails during the process. Not only that, but the more full your volume is, the longer it’s going to take. All the while, your volume is in what’s called a degraded state. You’d better suck it up and buy some external hard drives to copy your data onto tout de suite if you haven’t already got a backup for the NAS.
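The exposure during a rebuild can be sketched the same way. This assumes the surviving drives fail independently at a constant rate, which is optimistic given the shared-conditions point above, and the 2% annual failure rate is again a made-up illustration. The function name `p_second_failure` is my own.

```python
def p_second_failure(remaining_drives: int,
                     annual_failure_rate: float,
                     rebuild_days: float) -> float:
    """Chance that at least one surviving drive fails before the rebuild ends.

    Assumes independent failures at a constant rate. Correlated failures
    (heat, same batch, same age) would make the real risk higher.
    """
    exposure_years = rebuild_days / 365
    # Survival probability of one drive over the window, across all drives.
    return 1 - (1 - annual_failure_rate) ** (remaining_drives * exposure_years)


if __name__ == "__main__":
    HYPOTHETICAL_AFR = 0.02  # assumed, for illustration only
    # 6-drive RAID 5 with one drive dead leaves 5 survivors.
    for days in (1, 14, 30):
        risk = p_second_failure(5, HYPOTHETICAL_AFR, days)
        print(f"{days:>2}-day rebuild: {risk:.3%} chance of a second failure")
```

The point is not the specific percentages but that the risk grows with both the number of surviving drives and the length of the rebuild window.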

But you do have a backup policy for your NAS, right? 🙂

What does all of this mean? If you’re going to go larger than 4TB drives, and you actually care about your data lasting through a crisis, you’ll want to consider using RAID 6 (withstand 2 drive failures) at a minimum.

Bottom line: storage capacity is cheap. Data loss is outrageously expensive. There is a crossover point past which the risk you place yourself in climbs sharply. The more data you have that you want to keep, the more you're going to have to take into consideration. The enemy here is time.

Once you start getting into the “my god I’m going to be losing everything I have in the next month” kind of paranoia, you start to realize that investing in very good backup strategies and data archiving - yes, even for home use (it doesn’t have to be enterprise-grade stuff, just good, consistent policy) - is far less expensive than losing your data.

[2022.12.13 An earlier draft of this document discussed the impact of fragmentation of files that was misleading, ultimately. I've removed the offending text to keep the point focused and clean.]