This is my answer to a question that came in via Quora:

When should an administrator use a storage area network technology and when should he use a network area storage technology?

“Network Area Storage” is not a commonly used term. The correct expansion of the NAS acronym is “Network Attached Storage,” which might make the comparing and contrasting make a little more sense.

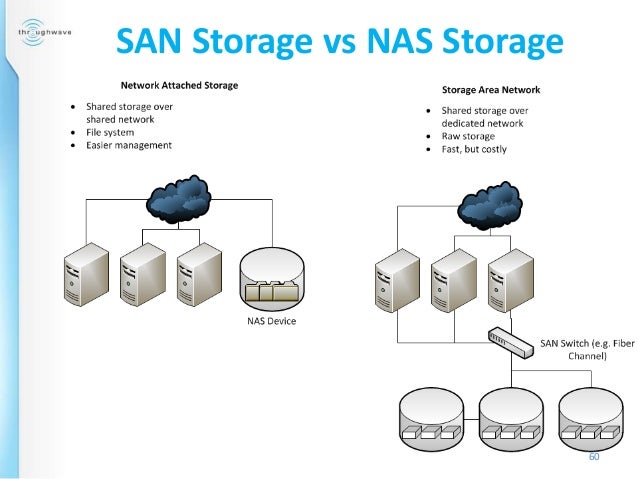

The decision to use one versus the other is not necessarily an “all or nothing” deal. Administrators almost always find use for both block storage (upon which SANs are based) and file storage (upon which NAS devices are based).

What is block storage, and what is file storage? Bear with me a moment and I’ll try to synthesize, at the risk of oversimplifying.

Block Vs. File

Block storage is the foundation for all storage. It refers to the ability to directly access the physical storage media (whether spinning disk or Flash SSDs). The more directly you can access the media, the faster the performance, because there are fewer software layers to get in the way and slow things down. The more direct the access, however, the more unforgiving it is, because there are also fewer software layers to protect the data and transport.

All storage is built on blocks

Generally speaking, networking protocols that access block data, like Fibre Channel, Fibre Channel over Ethernet (FCoE), iSCSI, NVMe over Fabrics, etc., are used for very fast access where a host (server) needs to own the volume. That is, the disk space is reserved for that host on a 1:1 basis – no sharing allowed.

This is very good for highly transactional applications and structured data, such as databases. It’s also very good for boot drives. Effectively, anything where data changes a lot, and changes frequently, is a good candidate for dedicating the media to the host and using block storage area networks like the ones mentioned above.

File storage can be thought of as sitting atop block storage. All files need to have access to the blocks on disks – the real question is which device actually does that translation. Your operating system on your laptop, for instance, has a file system that sits atop the block storage of your internal device.
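To make the "file on top of block" idea concrete, here is a deliberately tiny sketch in Python. Everything in it (`BlockDevice`, `ToyFileSystem`, the 4-byte block size, the in-order allocator) is a made-up illustration, not how any real file system is implemented. The only point is that the file layer is a lookup table from names to block numbers.

```python
BLOCK_SIZE = 4  # bytes per block; tiny on purpose so the mapping is easy to see


class BlockDevice:
    """Hypothetical block device: fixed-size blocks addressed by number."""

    def __init__(self, num_blocks):
        self.blocks = [bytes(BLOCK_SIZE)] * num_blocks

    def write_block(self, lba, data):
        # Pad short writes so every block stays exactly BLOCK_SIZE bytes.
        self.blocks[lba] = data.ljust(BLOCK_SIZE, b"\x00")

    def read_block(self, lba):
        return self.blocks[lba]


class ToyFileSystem:
    """Hypothetical file layer: maps a file name to a list of block numbers."""

    def __init__(self, device):
        self.device = device
        self.table = {}      # file name -> (length in bytes, [block numbers])
        self.next_free = 0   # naive allocator: hand out blocks in order

    def write_file(self, name, data):
        lbas = []
        for offset in range(0, len(data), BLOCK_SIZE):
            chunk = data[offset:offset + BLOCK_SIZE]
            self.device.write_block(self.next_free, chunk)
            lbas.append(self.next_free)
            self.next_free += 1
        self.table[name] = (len(data), lbas)

    def read_file(self, name):
        length, lbas = self.table[name]
        raw = b"".join(self.device.read_block(lba) for lba in lbas)
        return raw[:length]  # drop the padding added to the last block


disk = BlockDevice(num_blocks=16)
fs = ToyFileSystem(disk)
fs.write_file("notes.txt", b"hello world")
print(fs.table["notes.txt"])   # length and the blocks the file occupies
print(fs.read_file("notes.txt"))
```

In this toy model, a host that talks to `BlockDevice` directly is doing block storage. A host that only ever sees `read_file` and `write_file` is doing file storage, and some other device owns the translation.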

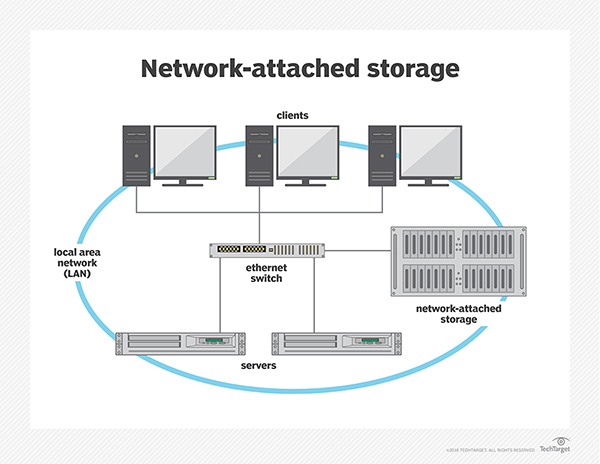

A Network Attached Storage system does the same thing, and then shares out a directory via a network, where it is mounted onto a host (can be a server or a client – my home Synology shares out a directory that I can access via my Mac as a folder in my Finder).

In common usage there are really only two protocols to choose from: NFS (Network File System, an open standard) and SMB (Server Message Block, a Microsoft standard). You’ll hear CIFS used as a synonym for SMB, but that protocol has been deprecated. AFP is Apple’s proprietary protocol, which is sometimes still used, but Apple has moved away from it as well, or is starting to (IIRC).

The key advantage to NAS is that it can do either structured data (like databases) or unstructured (a loose collection of files, such as images, videos, etc.). It’s designed to support a large number of clients and, most importantly, share the media (SAN volumes cannot be shared).

Some examples of structured vs. unstructured data (Photo Credit: Debobrat Paul’s Business Intelligence Blog)

As a result, the oversubscription ratio for NAS is considerably higher than it is for SAN. Oversubscription refers to the ratio between hosts and media: a typical FC SAN runs between 4:1 and 16:1, maybe as high as 20:1. A NAS device can have oversubscription ratios around 50:1 or 100:1 (I’ve seen higher).
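As a rough sketch of the arithmetic, the ratio can be computed by dividing the number of hosts by the number of storage ports they share. The function name and the host and port counts below are made up for illustration, and counting ports rather than bandwidth is a simplification.

```python
from fractions import Fraction


def oversubscription(hosts, storage_ports):
    """Return the host-to-storage-port ratio as a reduced 'N:M' string."""
    if hosts <= 0 or storage_ports <= 0:
        raise ValueError("hosts and storage_ports must be positive")
    ratio = Fraction(hosts, storage_ports)
    return f"{ratio.numerator}:{ratio.denominator}"


# Hypothetical environments, chosen to land in the ranges quoted above.
print(oversubscription(64, 4))    # an FC SAN-style fan-in
print(oversubscription(200, 2))   # a NAS-style fan-in
```

With these made-up counts, the first environment works out to 16:1 and the second to 100:1.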

Oversubscription is a way to avoid wasted bandwidth (Source: Cisco)

Workloads

Each workload has a unique signature that lends itself to matching up nicely (or poorly) with a corresponding storage networking protocol.

- Write versus Read %

- Access pattern – random versus sequential access

- Data v. Metadata

- Data Compressibility

- Block/Chunk size

- Metadata command frequency

- Use of asynchronous/compound commands

- I/O Load – constant or bursty

- Data locality

- Latency sensitivity
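A couple of the items above can be measured straight from an I/O trace. The sketch below assumes a made-up trace format, a list of `(operation, size_in_bytes)` tuples, and computes only the read/write mix and the mean I/O size. Real tracing tools capture far more than this.

```python
def summarize(trace):
    """Summarize a trace of (op, size_in_bytes) tuples, where op is 'R' or 'W'."""
    if not trace:
        raise ValueError("trace must contain at least one I/O")
    total = len(trace)
    writes = sum(1 for op, _ in trace if op == "W")
    total_bytes = sum(size for _, size in trace)
    return {
        "write_pct": 100 * writes / total,
        "read_pct": 100 * (total - writes) / total,
        "mean_io_kib": total_bytes / total / 1024,
    }


# Hypothetical trace: mostly small reads, plus one large write.
trace = [("R", 4096), ("R", 4096), ("W", 8192), ("R", 4096), ("W", 65536)]
print(summarize(trace))
```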

Fortunately, Data Center application vendors have a good understanding of what the impacts/requirements are, and will list them off for you when planning your environment.

There’s also the issue of the “noisy neighbor” and “I/O blender” effects, which are a consequence of virtualization. Applications with different I/O types are placed into different VMs on the same physical server, and I/O predictability goes out the window. Mobility also becomes a problem at that point, as arrays often depend on Hypervisors for data protection features and storage provisioning (also called “Hypervisor Huggers”).
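The I/O blender is easy to see in a toy simulation. The sketch assumes three hypothetical VMs that each read their own region of a shared volume sequentially, and a hypervisor that interleaves their requests round-robin. Real schedulers are nowhere near that tidy, but the effect is the same.

```python
def sequential_fraction(lbas):
    """Share of one-block requests that start right after the previous one."""
    if len(lbas) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(lbas, lbas[1:]) if cur == prev + 1)
    return hits / (len(lbas) - 1)


# Three hypothetical VMs, each reading eight consecutive blocks of its own region.
vm_streams = [list(range(start, start + 8)) for start in (0, 1000, 2000)]

# Assumed round-robin interleaving: one request from each VM in turn.
blended = [lba for group in zip(*vm_streams) for lba in group]

for stream in vm_streams:
    print(sequential_fraction(stream))   # what each VM thinks it is doing
print(sequential_fraction(blended))      # what the array actually sees
```

Each VM on its own is perfectly sequential, yet in the blended stream no request follows on from the one before it, so the array sees what looks like purely random I/O.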

For those kinds of environments, changing to an NFS environment from block helps, because each virtual disk has its own connection through the network and doesn’t require LUN locking (like block storage does), and it natively retains much of the info that is lost in the I/O blender on the block side.

However, legacy NFS servers (before NFS 4.1) are poorly suited to operations on individual files, and many NFS servers are incapable of handling the volume of I/O load generated by server virtualization (see the part about oversubscription above).

Protocol Wars: SAN v. NAS

Yes or no?

There is more than performance involved in determining whether to use a SAN versus a NAS, though. There are cost, durability, reliability, management, scale, and expandability (not quite the same as scale) concerns as well.

You’re going to get a lot of opinions on this. In fact, emotions get so high, so fast, that it’s not uncommon for misinformation to be among the first “arguments” put forward. There are differences, and there are similarities, and there are even overlaps in functionality and capabilities.

FCoE is FC, for example, just running on a different wire. You’ll architect and manage it exactly the same way as a normal FC SAN. iSCSI is an incredibly robust and well-understood technology and is highly appropriate for certain environments. NVMe over Fabrics is an emerging standard that will be able to address NVMe devices (very, very fast SSDs) over a network. They each have their “sweet spot.”

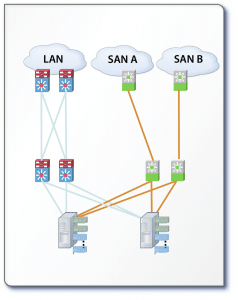

FC: High degree of control; high degree of rigidity (Source: Cisco)

The downside about physical Fibre Channel is that it requires its own network and network equipment, as well as the teams to manage them. The upside of Fibre Channel is that it’s a rock-solid means of moving data around when you can’t afford performance hiccups or downtime. All data center FC solutions are qualified and guaranteed to work end-to-end, from Operating Systems through Adapters via Switches to End Target Storage Devices. FC is the go-to protocol for databases and most Flash deployments (I’ve even written specifically about Fibre Channel and NVMe, if you’re interested).

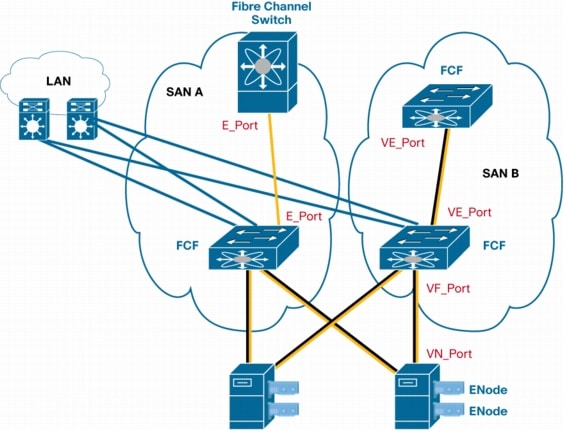

FCoE helps consolidate from host (at bottom) to first switch (Source: Cisco)

The downside about FCoE is that there was so much confusion and FUD around its introduction in 2008 that most people think it “failed” because it didn’t “kill” FC (which is rather silly, because it is FC). The upside about FCoE is that you can attach to native-FC storage from an Ethernet-only server, and use the Fibre Channel Protocol side-by-side with other Ethernet traffic (including LAN and iSCSI and NFS). That means you can capitalize on converging your networks onto one where it makes sense, like from a host to the first switch.

The downside to iSCSI is that it becomes difficult to maintain performance and manageability once a certain number of devices are attached to the network. Oversubscription can get unwieldy, as it’s managed “outside in” – that is, you have to touch every device in order to manage them. For very large (thousands of nodes) systems this gets problematic when trying to maintain performance levels. The upside to iSCSI is that it only requires a software initiator and an IP address to get started. No extra network fiddling required.

The downside to NAS systems is that historically they have not performed nearly as well (which is fine if you’re just looking for a repository, but not so much if you’re looking for guaranteed performance levels). They have also historically had a difficult time with cross-platform compatibility. The upside is that there are considerable advantages to sharing, clustering, and copying data; NAS uses standard Ethernet components; and advances in the standards have addressed many of the performance issues of legacy versions.

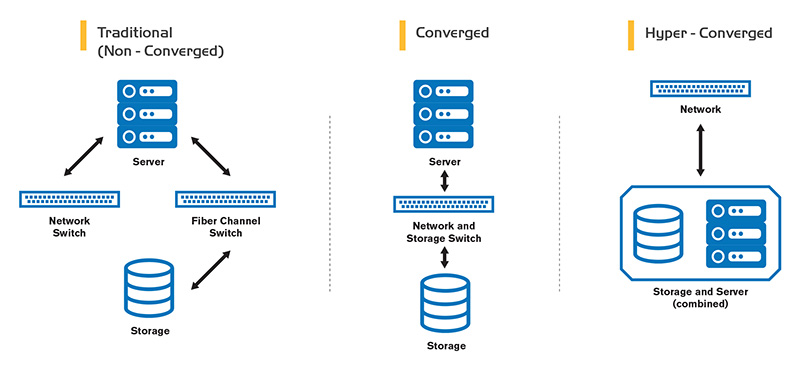

It might be useful to note that many of the modern systems available today break the “compute-network-storage” data center model, opting for more “hyperconverged” methods with compute/storage co-located in the same environment, and use an IP-based approach (either iSCSI, NFS or some proprietary file storage protocol) for inter-communication between nodes. However, those systems also have scale limitations (FC networks can be as large as 20k nodes, while the hyperconverged “sweet spot” is 8–16 nodes).

Which To Use?

Obviously, any vendor who has a product to sell is going to say that their solution is the best, and that the others are going to suck. 🙂

There is no substitute for understanding the holistic impact of the storage environment. Many server administrators think of storage data as “an I/O problem,” but as you can see there is far more involved in making the determination of which to use than simple I/O metrics.

Generally speaking, however, technological advances have improved so much in the past 5 years that most smaller environments (sub-200 nodes, at least) are going to be very happy with modern IP-based solutions (either block or file).

More Information

I recommend looking at a few places for vendor-neutral (as much as possible) information on how this stuff works. SNIA Ethernet Storage Forum is a very good source for understanding some of the basics.

Here are a few that I recommend for getting some of the basics under your belt:

- SNIA. Life of a Storage Packet (Walk) (Webinar) (PDF)

- Erik Smith. FC and FCoE versus iSCSI – “Network-centric” versus “End-Node-centric” provisioning.

- SNIA. Evolution of iSCSI (Webinar) (Q&A)

- SNIA. What is NFS: A Brief Introduction (Webinar)

- J Metz. NVMe Program of Study.

It’s also very helpful to understand how this performance is measured, because it goes to the core of the question of which to choose:

SNIA Storage Performance Benchmarking:

- Part 1: Introduction and Fundamentals (Webinar)

- Part 2: Solution Under Test (Webinar) (Q&A)

- Part 3: Block Components (Webinar) (Q&A)

- Part 4: File Components (Webinar) (Q&A not posted as of this writing)

When I can find time, I’m intending to create a Program of Study article for Storage basics. If you happen to come across a good “storage basics” article (vendor neutral, please!) that should be added, please let me know!

P.S.

I’ve recently created a Patreon account. If you want to see more posts like this – about Synology or anything else to do with storage – please consider sponsoring future content.

Or, if you simply want to show your appreciation for helping you with this problem, you can do a one-off donation. All moneys go towards creating content. 🙂

Comments

Great post J.

Got a question on your statement about SANs: “the disk space is reserved for that host on a 1:1 basis – no sharing allowed”

Doesn’t sharing happen in the case of clustered file systems running on servers (e.g., VMFS) that are mounting LUNs off a SAN?

The clustered file system takes a SCSI level lock on the LUN when accessing it to maintain data consistency.

Hi Harsha,

Clustered file systems are, indeed, a very different animal and deserve their own, dedicated post (to be sure). I’ve put in a question to a VMW friend who can hopefully address the specifics about VMFS (I’m not an expert on it), but your question actually contains the answer inside.

SCSI – as a bus – does not allow for the sharing of blocks stored on media. Clustered file systems deliberately break the SCSI model in order to get sharing enabled. In other words, because the disk is reserved on a 1:1 basis, if you want to share it you’ll have to break that model. Clustered file systems do this by inserting an abstracted metadata layer to control the SCSI operations (as opposed to the host), so that the host thinks it still has ownership of a target drive, when in fact there’s a go-between metadata server (MDS) that manages when and how media is written to.

I know that different models exist for various clustering systems, but in all of them there is that additional abstraction layer that intervenes in the host/target write operations.

Thanks for replying J.

But still aren’t the nodes part of the clustered file system mounting a SAN LUN sharing data?

How prudent is it to claim “No sharing allowed in a SAN”?

SAN as a technology doesn’t prohibit sharing. If the applications using the SAN want to share data they can always do it.

Hi Harsha, I think we’re starting to get into the weeds here. Essentially there is nothing inside of a SAN that allows for sharing. In and of itself, SAN software does not allow for sharing, which is why you need to have additional software to accomplish the task. If you want to have those additional software features, you need to add capabilities that the SAN cannot provide on its own, just like any other solution.

By themselves SANs do not allow for sharing, so if you want to add that feature, you have to add the software to the SAN to get the feature.

Good information… thanks

Thanks for reading!

Hello Harsha,

Say a fresh college graduate wants to get into the storage field. What would be the basics that one has to go through?