So many fabrics!

There is quite a bit of hype right now about NVMe and its sister standard, NVMe over Fabrics (NVMe-oF). Along with hype comes a bit of confusion, though, so I have found myself talking about a number of the different Fabrics (as NVMe-oF is often shortened), where they fit, where they might not fit, and even how they work.

I’ve decided, whenever I can scrape together the time, to discuss some of the technologies behind NVMe over Fabrics, as well as some alternatives emerging on the market that are not standards-based. This does not mean they aren’t good, or that they don’t work: it just means that they are a different way of solving the problem that the NVM Express group has laid out.

The first technology I’m going to talk about is Fibre Channel (FC). Quite frankly, very little has been said about NVMe and FC when it comes to Fabrics, and I think it is worth talking a bit about some of the reasons why FC may be a good choice for some users of NVMe-oF.

Background of NVMe-oF

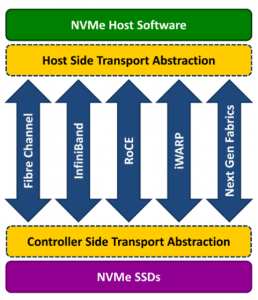

Source: nvmexpress.org

If you’re not familiar with NVMe, I recommend that you start with a decent program of study to learn about what it is. I’m making the assumption that you have already had some exposure to NVMe, if not NVMe-oF at this point (but in case you haven’t, I’ve put together a bibliography for you).

NVMe over Fabrics is a way of extending access to non-volatile memory (NVM) devices, using the NVM Express (NVMe) protocol, to remote storage subsystems. That is, if you want to connect to these storage devices over a network, NVMe-oF is the standard way to do it.

As I’ve discussed before, the standard is completely network-agnostic. Whether your storage network is InfiniBand, Ethernet, or Fibre Channel, there are ways of transporting NVMe commands across it.
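To make the “network-agnostic” point concrete, here is a minimal Python sketch built around a hypothetical `Transport` interface that is not part of any real driver or library. The NVMe command is built once, and each fabric only decides how to wrap and carry it. The wrapper labels are illustrative only and do not describe the actual wire formats.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class NvmeCommand:
    """Simplified NVMe I/O command: only the fields this sketch needs."""
    opcode: int  # 0x02 is Read in the NVM command set
    nsid: int    # namespace ID
    slba: int    # starting logical block address
    nlb: int     # number of logical blocks, 0's-based (7 means 8 blocks)


class Transport(ABC):
    """Hypothetical transport interface: each fabric decides how to carry
    the command, but never rewrites the command itself."""

    name = "abstract"

    @abstractmethod
    def encapsulate(self, cmd: NvmeCommand) -> dict:
        """Wrap the command for this fabric."""


class FibreChannelTransport(Transport):
    name = "fc"

    def encapsulate(self, cmd: NvmeCommand) -> dict:
        # Illustrative label only: carried within an FC exchange.
        return {"fabric": self.name, "wrapper": "FC exchange", "command": cmd}


class RdmaTransport(Transport):
    name = "rdma"

    def encapsulate(self, cmd: NvmeCommand) -> dict:
        # Illustrative label only: carried in an RDMA send.
        return {"fabric": self.name, "wrapper": "RDMA send", "command": cmd}


def submit(cmd: NvmeCommand, transports: list) -> list:
    """Hand the same command to every transport."""
    return [t.encapsulate(cmd) for t in transports]


read = NvmeCommand(opcode=0x02, nsid=1, slba=0, nlb=7)
for capsule in submit(read, [FibreChannelTransport(), RdmaTransport()]):
    # Same command object on both fabrics; only the wrapper differs.
    print(capsule["fabric"], capsule["wrapper"], capsule["command"] is read)
```

The design mirrors the layering idea: the transport binding changes, but the command does not.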

At the moment, the hottest topics are the versions that run over Ethernet, because of the technology’s ubiquity and general flexibility in deployment options. RDMA-based technologies such as RoCE, iWARP, and iSER bring InfiniBand-like behaviors to Ethernet transport, and they are dominating the discourse on Fabrics at the moment. I’ll be addressing those in turn in later posts.

Fibre Channel and NVMe-oF (Geek Alert)

Like Ethernet, Fibre Channel has a layered model of its stack. At the upper layer, there is an entity called the FCP (Fibre Channel Protocol: how recursive!).

The Fibre Channel Stack

FCP is used to connect to even higher upper-layer protocols, such as SCSI and FICON. As it’s written now, Fibre Channel does not have an RDMA protocol, so FCP is used instead. What this means is that FCP provides a way of creating a “connection”, or association, between participating ports, so that NVMe devices are treated as, well, NVMe devices (instead of mimicking them or “mapping” them onto another protocol).

In other words, just as Fibre Channel uses FCP to link the SCSI layers of two end devices directly, it does exactly the same thing for the NVMe layers of those end devices.
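Here is a toy Python model of that idea. The receiving port reads the TYPE field of the FC frame header and hands the payload straight to the matching upper layer, with no SCSI-to-NVMe translation in between. The TYPE codes used here (0x08 for SCSI-FCP, 0x28 for FC-NVMe) are the commonly cited values as I understand them, so check them against FC-FS and FC-NVMe before relying on them. The handler functions are hypothetical.

```python
from dataclasses import dataclass

# FC-4 TYPE codes as commonly cited; verify against FC-FS / FC-NVMe.
FC_TYPE_SCSI_FCP = 0x08
FC_TYPE_NVME = 0x28


@dataclass
class FcFrame:
    type_code: int   # TYPE field from the FC frame header
    payload: bytes   # upper-layer protocol information unit


def handle_scsi(payload: bytes) -> str:
    # Hypothetical stand-in for the SCSI upper layer.
    return f"SCSI layer got {len(payload)} bytes"


def handle_nvme(payload: bytes) -> str:
    # Hypothetical stand-in for the NVMe upper layer.
    return f"NVMe layer got {len(payload)} bytes"


# One lookup, no translation step: each payload goes straight to the
# upper layer that understands it natively.
DISPATCH = {
    FC_TYPE_SCSI_FCP: handle_scsi,
    FC_TYPE_NVME: handle_nvme,
}


def deliver(frame: FcFrame) -> str:
    handler = DISPATCH.get(frame.type_code)
    if handler is None:
        raise ValueError(f"unsupported FC-4 TYPE 0x{frame.type_code:02x}")
    return handler(frame.payload)


print(deliver(FcFrame(FC_TYPE_SCSI_FCP, bytes(32))))  # SCSI layer got 32 bytes
print(deliver(FcFrame(FC_TYPE_NVME, bytes(64))))      # NVMe layer got 64 bytes
```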

The Fibre Channel Advantage

Beyond the protocol itself, however, there are three key pieces of the puzzle that give FC a distinct advantage for users who are currently trying to decide which fabric is most appealing.

1) Fibre Channel is a dedicated storage solution

This may seem trivial and obvious, but it actually makes a big difference.

NVMe is, for all intents and purposes, a “new” storage protocol technology. It works quite well, but there are enough nuances to change the nature of the way systems are architected and designed. RDMA-based protocols are not “natively” designed specifically for storage – on the contrary, they were designed for inter-process communication (IPC) between compute nodes with direct memory access (that’s the DMA part of RDMA – the “R” stands for “Remote”).

So, while RDMA-based solutions are extremely useful and show promise, they simply don’t have the track record for reliability and availability for storage. In fact, one of the big debates going on at the moment is which RDMA-based protocol is the most intuitive to deploy.

Fibre Channel, on the other hand, is a well-understood quantity. There is a reason why 80+% of Flash storage systems use Fibre Channel as the protocol of choice – it handles the demands of that kind of traffic with remarkable adeptness.

In other words, customers who are trying to minimize the moving parts of a new storage paradigm may find some comfort in keeping the change down to one variable: NVMe-oF itself.

2) Fibre Channel has a robust discovery and Name Service

When you put devices onto a network that need to communicate with other devices, there has to be a way to find one another.

Source: Designing Storage Area Networks: A Practical Reference for Implementing Fibre Channel and IP SANs (2nd Edition), by Tom Clark

As Erik Smith pointed out (better than I could have done), Fibre Channel is a “network-centric” solution. The network controls what each end device will be connected to. That is, the FC fabric by its nature knows every device that is connected to it. This makes the onerous job of discovering, controlling, and managing access to devices much easier.

This feature cannot be overstated. By comparison, iSCSI’s name service, the Internet Storage Name Service (iSNS), has often been criticized for its performance, reliability, and responsiveness in real-world deployments. In fact, many iSCSI deployments simply don’t use iSNS; end devices are manually configured to find their corresponding storage. It is a brilliant system, but you would be hard-pressed to find someone who would argue that the available implementations are as good as, or as reliable as, the FC Name Service.

Why bring up iSNS? Because the NVMe version of the Name Service is based heavily on the iSNS model. (Note: I am not dismissing or denigrating either iSNS or the NVMe name service. I’m simply saying that the Fibre Channel name server is practically bullet-proof by comparison).

Every Fibre Channel device – from any device manufacturer – will use this method of discovery and fabric registration. That kind of guarantee simply cannot be made for other, Ethernet-based systems.
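As a rough illustration of what “network-centric” means, the Python sketch below models a fabric name server with zoning. Every port registers its FC-4 types when it logs in, and a query returns only the ports the requester is zoned to see. The class and method names are hypothetical. Real fabrics do this through FC-GS directory-service requests such as RFT_ID (register FC-4 types) and GID_FT (get port IDs by FC-4 type), and this toy does not implement those.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class NameServer:
    """Toy fabric name server with zoning (hypothetical API)."""
    fc4_types: dict = field(default_factory=dict)                  # port -> types
    zones: dict = field(default_factory=lambda: defaultdict(set))  # zone -> ports

    def register(self, port_id: str, types: set) -> None:
        # Every port registers with the fabric when it logs in.
        self.fc4_types[port_id] = set(types)

    def add_to_zone(self, zone: str, *ports: str) -> None:
        self.zones[zone].update(ports)

    def _zoned_peers(self, port_id: str) -> set:
        # All ports that share at least one zone with port_id.
        peers = set()
        for members in self.zones.values():
            if port_id in members:
                peers |= members
        peers.discard(port_id)
        return peers

    def query_by_type(self, requester: str, fc4_type: str) -> list:
        # The fabric answers only with ports the requester may see.
        return sorted(
            p for p in self._zoned_peers(requester)
            if fc4_type in self.fc4_types.get(p, set())
        )


ns = NameServer()
ns.register("host_a", {"nvme"})
ns.register("array_1", {"nvme", "scsi"})
ns.register("array_2", {"scsi"})
ns.register("array_3", {"nvme"})          # registered, but in no zone
ns.add_to_zone("zone_a", "host_a", "array_1", "array_2")

print(ns.query_by_type("host_a", "nvme"))  # ['array_1']
```

The point is that the host never needs a hand-maintained target list. The fabric already knows who is present and who may talk to whom.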

3) Qualification and Support

I’ve saved the most important reason for last.

Unlike Ethernet-based devices (I’m deliberately leaving out InfiniBand, because there is really only one manufacturer for IB devices, realistically), Fibre Channel solutions are qualified end-to-end, from Operating System to Array.

Manufacturers of FC devices undergo very painful, very expensive qualification and testing procedures so that, when an FC solution is implemented, customers will not have to discover, painfully and on their own, that driver mismatches and hardware incompatibilities have made for a very expensive mistake.

It will be a long, long time before such an end-to-end qualification matrix exists for Ethernet-based systems, if ever. There are simply too many end-device manufacturers for every configuration possibility to be tested. Sure, plug-fests help (and they do!), but the cost of doing this for Ethernet solutions is prohibitive, at least to the level and degree that it exists for Fibre Channel.
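A little arithmetic shows the “too many combinations” problem. Each dimension of a qualification matrix multiplies the number of end-to-end configurations instead of adding to it. The counts in this Python sketch are invented for illustration and are not real vendor numbers.

```python
from math import prod


def combinations(counts: dict) -> int:
    """Number of distinct end-to-end configurations to qualify."""
    return prod(counts.values())


# Hypothetical counts, chosen only to show how the matrix grows.
fc_matrix = {
    "host OS versions": 6,
    "adapter models": 8,
    "driver/firmware versions": 4,
    "switch platforms": 5,
    "storage arrays": 10,
}

# Same matrix with three times the adapter choices and one extra
# two-way option (for example, which RDMA flavor is in use).
ethernet_matrix = {**fc_matrix, "adapter models": 24, "RDMA flavors": 2}

print(f"{combinations(fc_matrix):,}")        # 9,600
print(f"{combinations(ethernet_matrix):,}")  # 57,600
```

Tripling the adapter choices and adding a single two-way option multiplies the matrix by six.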

This becomes extremely important as we start to understand new NVMe-oF drivers, hardware, discovery protocols, and RDMA implementations. Add to that the challenge of guaranteeing network QoS and end-to-end integrity, and there are going to be several missteps before things get ironed out industry-wide.

Without question, Fibre Channel has the lead in this area, hands-down.

Conclusion

This is the first of, hopefully, several pieces on the different fabric solutions that can apply to NVMe over Fabrics. I hope to get to the others (RoCE, iWARP, iSER, InfiniBand, and other non-standard approaches) soon.

My intent here is not to say that one technology (i.e., Fibre Channel) is better than the others, just that there are distinct advantages that, as of this writing, make FC worth a look. (RDMA-based protocols have distinct advantages as well, but one thing at a time.)

As always, your mileage will vary. All I’m hoping for is to provide a means for people to think about the new technology in a way they may not have thought of before.

Stay tuned.

[Update: The FCIA is presenting a webinar on FC-NVMe on February 16. Register for the event, even if you can’t attend live, so that you can watch it at your leisure.]

P.S.

I’ve recently created a Patreon account. If you want to see more posts like this – about Synology or anything else to do with storage – please consider sponsoring future content.

Or, if you simply want to show your appreciation, you can make a one-off donation. All moneys go towards creating content. 🙂

Comments

Unfortunately FC is way too slow for NVMe, even 128G FC. 400Gb Ethernet has been used with NVMe to demonstrate performance (by Microsoft recently), and that is OK, but still not fast enough for Optane (3D XPoint). So we are going to see a lot of disruption in networking to support flash over the next couple of years. Not sure how many 32G FC deployments there are, but it appears that FC is not keeping up with flash. Even the PCIe bus is too slow at the moment for some of the new flash tech. So many things will need to change to really leverage the capability of modern and ever-advancing flash technology.

Hi Michael, thanks for stopping by.

You are confusing throughput with latency. When you talk about “fast enough,” you are talking about the ability of a network to maintain the low latency that the media devices require in order to capitalize on the efficiencies of non-volatile media. To that end, the latency that FC adds to NVMe (compared with native PCIe) is more than acceptable. In fact, it’s damn impressive. FCIA demonstrated extremely low-latency FC-NVMe at the Flash Memory Summit.

I’m not going to do a comparison between FC and Ethernet-based systems here. That was not the point; I will be talking about the advantages of other Fabrics technologies in other posts. You are, however, forgetting about how FC is often deployed – with link aggregation (portchanneling) techniques. Using SRC/DST/OXID load balancing we can take 16 individual 32GFC links and aggregate to more than 400Gb (something close to 450 actual Gbps throughput, if I recall correctly).
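Both ideas in that reply, exchange-based load balancing and the aggregate bandwidth of 16 × 32GFC, fit in a few lines of Python. SHA-256 stands in for the switch’s hash, which is vendor-specific. The 32GFC figures (28.05 GBaud line rate, 3200 MB/s nominal throughput per direction) are the published speed-class numbers as I understand them.

```python
import hashlib

LINKS = 16
# 32GFC speed-class figures (as published, to my understanding).
LINE_RATE_GBAUD = 28.05     # per-link signalling rate
NOMINAL_MB_PER_S = 3200     # per-link nominal throughput, one direction


def pick_link(src: int, dst: int, oxid: int, links: int = LINKS) -> int:
    """Map an exchange (source, destination, OXID) to one member link.
    Real switches use their own hash; SHA-256 is only a stand-in."""
    key = f"{src:06x}:{dst:06x}:{oxid:04x}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % links


# Every frame of one exchange has the same key, so it stays on one link
# (frames stay in order), while different exchanges spread across links.
used = {pick_link(0x010100, 0x020200, oxid) for oxid in range(1000)}
print(f"{len(used)} of {LINKS} links carry traffic")

line_rate_gbaud = LINKS * LINE_RATE_GBAUD              # aggregate signalling
data_rate_gbps = LINKS * NOMINAL_MB_PER_S * 8 / 1000   # aggregate payload
print(f"{line_rate_gbaud:.1f} GBaud line rate, {data_rate_gbps:.1f} Gb/s data")
# -> 448.8 GBaud line rate, 409.6 Gb/s data
```

By this arithmetic, the “close to 450” figure matches the aggregate line rate. Usable payload throughput lands a bit above 400 Gb/s, which still supports the “more than 400Gb” claim.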

Again, the point here is to explain why people might find FC attractive. In other posts I’ll discuss why people may find alternatives attractive.

Thanks once more for adding your thoughts.

RDMA is quite proven for storage. How do you think Azure storage scales, or Oracle Exadata, or Teradata? Most of the storage products from EMC (XtremIO, Isilon, Symm) and many of the all-flash arrays use it too, not to mention the storage backbone of most of the large supercomputers in the world.

And you can use the same 25-100G wire for network, IPC, and storage, with strong low-level isolation, versus having a low-performance, single-purpose fabric.

In general I’m not a fan of NVMe-oF for other reasons: the use of block/SAN is quite limiting and expensive compared to scale-out, DAS-based HCI/object/file/databases. I don’t see a single cloud provider basing their storage connectivity on block protocols.

Yaron

Yaron,

Thanks for the comments.

You are correct; back-end storage systems have been using InfiniBand for years. What I was referring to was enterprise environments where the host does not have an RDMA initiator, but rather must be managed (often by a separate team). One does not provision back-end storage the same way one provisions storage for hosts/servers. To that end, RDMA-based protocols do not have the maturity that FC-based systems do.

As was shown at the Flash Memory Summit, there are many questions that people have about how RDMA and NVMe intersect, and in which flavors. Most people do not understand the concept of verbs – yet. It simply isn’t as well-understood in the marketplace as traditional Fibre Channel.

As I’ve said repeatedly, I’m a huge fan of using the right tool for the job. There are more aspects to running enterprise-class storage in a data center than the number of IOPS you can get out of an adapter, and this article was intended to discuss some of those aspects (as they relate to Fibre Channel) that people may not have thought about.

Thanks again for commenting.

J

Hi J

Great post! As much as we see the benefits of Ethernet/IP-based storage protocols, customers continue to love FC.

I would like to debate the Qualification and Support benefit of FC (point number 3). In the IP/Ethernet world, we never ask for such qualifications. A client machine initiates a request (maybe a TCP session), gets routed/switched via the network, and reaches the server to start the end-to-end communication. IETF standardization has worked extremely well, and customers never think about qualification because it just works, and any vendor who deviates from the standards is expected to support that. Similar behavior is needed in FC world as well where customers just connect and things work. This would bring down the cost and complexity. I am sure there are valid reasons why this hasn’t happened, and may never happen, in FC. But comparing it to IP/Ethernet world, it looks like as if things are not very standardized in FC and to plug that gap, we need vendors to qualify.

Thanks

Paresh

…my personal views….

Hi Paresh,

Good points. I’d like to try to make sure I capture your thoughts correctly. (Don’t worry about them being “personal views”; that’s what all of this is. 😉)

When we talk about storage systems, it is not quite accurate (IMO) to think that Ethernet solutions “just work.” There are different TCP/IP stacks, different types of Layer 4 configurations, and different types of networking impacts. The VMware knowledge base is chock-full of best practices and recommended behaviors, not to mention caveats, for different IP storage targets.

You say, for example, “similar behavior is needed in FC world as well where customers just connect and things work.” This is, in fact, exactly the point of the extensive qualification process. Customers have been comfortable deploying FC systems because they know precisely which OS versions, adapters, switches, and targets to deploy, and with that, it “just works.”

“But comparing it to IP/Ethernet world, it looks like as if things are not very standardized in FC and to plug that gap, we need vendors to qualify.”

I’m afraid I couldn’t disagree more with this statement. It is precisely the vendor qualification for FC that is superior here. I believe you will be hard-pressed to find an FC storage vendor whose sponsored qualification does not extend all the way back to the host OS.

Thanks again for writing!

J

IMHO what is missing from this discussion is a picture of a blender where different ULPs (e.g. SCSI, NVMe, IP, proprietary, AV, VI, etc.) get tossed in, along with a mix of different transports/networks (FC, PCIe, GbE, IBA [non-converged/non-GbE mode], RapidIO, etc.), combined with a mix of copper and optical cables (localized to your converged connection needs). Then toss in higher-level block (or block-like) for deterministic behavior, or file, or various object, API and programmatic bindings as well as streams, then add plenty of your preferred adult beverage or the supplied Kool-Aid. If your blender has one, set the Aggregated, Disaggregated or Hybrid mode, then press the CI, HCI, CiB, SDS, Classic or Pulse button until the desired consistency is reached, then press STOP, and press Pulse as needed. Serve in your favorite logo’d glass and enjoy along with a scale-out serving of popcorn in an NFR bowl obtained from a recent conference or event ;)…

Seriously, it seems like things are getting muddled together instead of separating out upper and lower levels along with usage. Not everything needs block, file or object; likewise, some things need block, file and object. Some things lend themselves to localized DAS (dedicated or shared), while others can be adapted, and others have different needs. Everything is not the same. RDMA is good and has been around for, what, 10-15+ years; however, it has been newer upper-level software stacks (higher up in altitude) that add management and other features to make it viable. When it comes to FC, there is the lower level and the upper levels; perhaps the conversation should be on SCSI_FCP (aka FCP), i.e. the protocol, or what many simply refer to as FC, vs. NVMe (e.g. the protocol). Likewise for DAS, it’s a conversation about NVMe the protocol over PCIe vs. SAS or SATA/AHCI, etc…

Anyways, now I’m getting hungry for some Whirley Pop (http://www.whirleypopshop.com/) popcorn and a Frozen Concoction (the other FC), maybe even a mister misty protocol-induced headache…

Ok, nuff said, for now…

Cheers gs

Nice perspective and thanks for putting it down for discussion.

One of the reasons people seem to dislike FC is the problem of managing it. NFS made life simple, both in accessibility and in managing capacity, while sacrificing performance and latency. There was no name service other than good old general-purpose DNS. Complex virtualized environments were built on it. Now, if we could bring the simplicity of consuming remote storage (like NFS) but give the latency of RDMA and the predictability of FC, then we would have a winner. We see people move to hyperconvergence with the promise of agility, buying into the weaker promise of low latency. Bottom line, give me simplicity. I would like to mount the remote storage onto a local path and be done with it. Forget the discovery, forget the name service, and keep life simple. As much as we all keep harping on the fact that security is everything, no security is bulletproof, and the best security is pure isolation. People still use NFS v3 for a lot of virtualized infrastructure. NetApp made a fortune on this. So how is my life going to be easier by doing NVMe over FC? How is NVMe going to make life easier when compared to iSCSI or FC? If doing NVMe over FC is going to drag in all the complexity of managing a SAN, then why is this the general-purpose future? I see it as a niche. A disruptive technology that delivers all four things (performance, business continuity, cost, and agility) is the winner, not one that does just one or two of the four. I am watching some of the standards activity around NVMe, and all I can do is shake my head. In my opinion, folks are looking at RDMA (Ethernet) + NVMe more at a rack-scale or pod-like architecture. The more I talk to large enterprises, the more they want to move to a rack-scale architecture, and the more they also want to get away from a centralized SAN model where a dedicated organization manages it.
I am not saying that it is going to happen now, but time will tell and the movement has momentum and the wheels are in motion.

My 2 cents worth

VR Satish

Hi VR Satish,

You raise a lot of very good points. I’m behind on my series, but I will be getting to the RDMA aspects of things as soon as I can (unfortunately, RoCE and iWARP are starting to become something of a religious battle now).

There is, as you say, a trade-off (see my blog on storage tradeoffs, for instance). There are those who want the storage network to be deterministic and predictable at larger scale and oversubscription ratios than NFS typically provides. Many solutions are looking to take, as Greg points out, a different means of “higher level” management on top of the lower-level performance deployments. That is to say, server-side or rack-scale deployments that are very fast and easy to manage, coupled with a more significant penalty for workloads that need to traverse those boundaries, in exchange for greater management freedom.

It is, of course, all relative to what customers currently have in their data centers today. 🙂

This article is not to say that this is the only way to solve the storage problem, but rather one way that has some advantages for certain people who fall into this specific category. How “niche” it will become is also relative, however, as we’re still talking about a lot of networking ports. It may very well be that the “niche” is still robust enough to sustain a healthy solution.

In any case, thanks for sharing. 🙂
