Excellent question! The answer depends on how deep in the weeds you wish to go. 🙂

All storage devices are far more complicated than many people realize. NAND devices (including SSDs, USB thumb drives, etc.) are all susceptible to failure, which goes against storage’s One Job™: Give me back the correct bit I asked you to hold on to for me.

A couple of definitions of terms, first and foremost:

SSD: Stands for solid-state drive (or disk), a storage device with no moving parts. SSD is more of a form factor than a memory technology, but the terms are often (incorrectly) used interchangeably.

Flash: A type of memory that doesn’t require power to retain data. That is, you do not lose data when power is turned off. DRAM, SRAM, etc. are volatile forms of memory (they lose the data when the power is turned off), so those types of memory are not considered Flash.

NAND: The actual non-volatile memory type that is commonly used in Flash devices, which happen to be constructed into the SSD form factor. It’s also used in CompactFlash cards (found in digital cameras), USB sticks, memory cards for video game consoles, etc. (Your computer’s BIOS chip is Flash too, though typically of the NOR variety rather than NAND.)

Save The Bit!

Now, we all know that Flash technologies are very fast. In fact, they are so fast, why would we use anything else?

The problem is that NAND technologies are not perfect. Like anything else, there are trade-offs, and when answering a question like this one, knowing what those trade-offs are makes a huge difference in understanding where things can go wrong.

For one thing, it’s important to know that NAND technology is actually pretty delicate. In fact, it’s more delicate than the media used for spinning disk. Among the things to keep in mind are erasure, usage, and retention of data.

Let’s look at each in turn.

You may have heard of Single-Level Cell (SLC), Multi-Level Cell (MLC), Triple-Level Cell (TLC), or Quad-Level Cell (QLC) technologies. These have to do with how many bits you can stuff into a cell (1, 2, 3, and 4, respectively). The NAND technology used in early Flash storage appliances (like Violin Memory, for example) was SLC. Many of them still are, in fact, though they are far less popular nowadays due to their cost.

At the risk of oversimplifying, the more bits per cell you have, the cheaper the device will be, but also the slower, the more fragile, and the more prone to failure the device will be. That’s why the compromise in many different types of SSDs for Data Centers and consumer-based electronics has been an MLC-type or modified TLC-type. More on these modifications in a second.
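To see why more bits per cell makes life harder, here is a small sketch of the arithmetic. It assumes only that storing n bits means the cell has to hold one of 2^n distinguishable charge levels; the names are made up for illustration.

```python
# Each extra bit per cell doubles the number of charge levels
# the controller has to tell apart within the same cell.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

def charge_levels(bits_per_cell: int) -> int:
    """Number of distinct states a cell must hold to store that many bits."""
    return 2 ** bits_per_cell

for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {charge_levels(bits)} charge levels")
```

The more levels you squeeze into one cell, the smaller the margin between them, which is one way to think about the "slower and more fragile" trade-off.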

These different types have different erase counts. What’s an erase count?

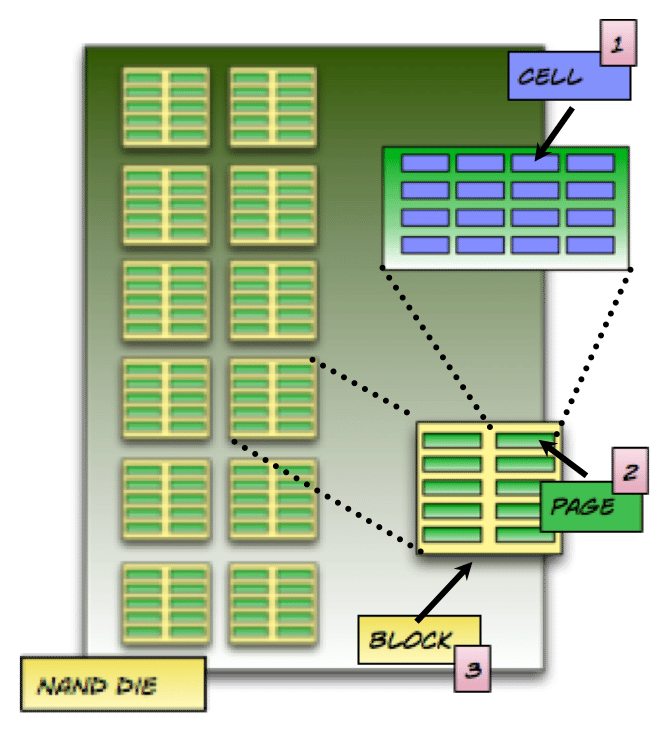

Look at this image:

(Okay, that came up bigger than I expected. Oops!)

The Cell (#1) is what we were just talking about. You group these cells into pages (#2), which are the smallest unit that can be programmed (written to). These pages are grouped into blocks (#3). Blocks typically have 128 or 256 pages. Blocks are the smallest unit that can be erased.

Aha! I hear you saying. I can write to a page, but I have to erase a whole block? Yes, yes you do.

“Well, what if I want to erase something that I wrote to a page, but there are other pages in the block that I don’t want to erase?” Yes, we’re getting to the tricky part. You have to move the data to a new place. This process of moving data around on a disk in order to “clean up” areas is called Garbage Collection.

And it happens all the time.
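If it helps, here is a toy model of that clean-up step. It is only a sketch: the four-page block, the (data, valid) tuples, and the function name are all assumptions for illustration, and real controllers are far more involved.

```python
PAGES_PER_BLOCK = 4  # real blocks hold far more pages; kept tiny for illustration

def garbage_collect(block, spare_block):
    """Copy still-valid pages into a spare block, then erase the old block whole.

    A page is modelled as a (data, valid) tuple, or None when erased.
    """
    assert all(page is None for page in spare_block), "spare block must be erased"
    write_index = 0
    for page in block:
        if page is not None and page[1]:      # still-valid data
            spare_block[write_index] = page   # program one page at a time
            write_index += 1
    for i in range(len(block)):               # erase happens at block granularity
        block[i] = None
    return write_index  # number of pages that had to be moved

old = [("A", True), ("B", False), ("C", True), ("D", False)]
spare = [None] * PAGES_PER_BLOCK
moved = garbage_collect(old, spare)
```

Two pages were stale, but freeing them still meant copying the other two somewhere else first. That extra copying is the cost of erasing by the block.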

Worse, this has to be done uniformly across the device. In order to maintain some semblance of predictability of wear and tear, the entire device needs to be written to, and erased, at the same rate across all the blocks/pages in the Flash device.
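This practice is commonly called wear leveling. One very simplified way to picture it, assuming the device tracks an erase count per block (the four-block device and the function name below are hypothetical):

```python
def pick_block_to_write(erase_counts):
    """Return the index of the least-worn block (lowest erase count)."""
    return min(range(len(erase_counts)), key=lambda i: erase_counts[i])

erase_counts = [0, 0, 0, 0]   # hypothetical device with four blocks
for _ in range(10):           # ten erase/rewrite cycles
    block = pick_block_to_write(erase_counts)
    erase_counts[block] += 1
```

Always choosing the least-worn block keeps the counts within one erase of each other, so no single block wears out long before the rest.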

Even if your program/application doesn’t need to move data, the NAND device needs to do so. In fact, your data doesn’t simply “sit” and wait to get used; NAND materials must be refreshed on an ongoing basis, and as a result data is moved, whether you want it moved or not, every 90, 60, or 30 days, or fewer!

Risk vs. Reward

Every time you move data around, whether it be over a network or on the same storage device, you run a risk of losing data. That means that there have to be checks and re-checks to ensure that the data is not corrupted during a move.

Every Flash device has what’s called a Flash Translation Layer (FTL). This is the really important piece of the puzzle to ensure that you don’t lose your data. If this gets hosed, you’re screwed. It’s the part of the device that knows where everything is, where everything is being moved to, and which parts are ready to be overwritten.
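To make the FTL a little less abstract, here is a toy version. It assumes the FTL is, at heart, a lookup table from the addresses the host uses to the physical pages where the data currently lives; the class and field names are invented for this sketch and do not come from any real firmware.

```python
class ToyFTL:
    """Maps logical block addresses (what the host sees) to physical pages."""

    def __init__(self, physical_pages):
        self.mapping = {}                      # logical address -> physical page
        self.free = list(range(physical_pages))
        self.stale = set()                     # pages waiting for garbage collection
        self.flash = {}                        # physical page -> data

    def write(self, logical, data):
        new_page = self.free.pop(0)            # NAND cannot overwrite in place
        self.flash[new_page] = data
        old_page = self.mapping.get(logical)
        if old_page is not None:
            self.stale.add(old_page)           # old copy is now garbage
        self.mapping[logical] = new_page

    def read(self, logical):
        return self.flash[self.mapping[logical]]

ftl = ToyFTL(physical_pages=8)
ftl.write(0, "first draft")
ftl.write(0, "second draft")   # same logical address, new physical page
```

Lose the `mapping` table and the data is still physically on the flash, but nothing knows which page holds what. That is why the FTL matters so much.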

Obviously, the device does everything in its power to protect the FTL. Depending on the device, what that means can be extremely robust (redundant memory buffers), or extremely weak (as in the case of USB sticks, which are supposed to be disposable).

Different devices also have varying degrees of Caches and Buffers. These are temporary holding locations for higher speed storage. (For the true geeks, the main difference between cache and buffers is that a cache is indexed, and can service READ requests, while a Buffer is not indexed, and cannot service READ requests).
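For the true geeks who also like code, the cache/buffer distinction can be sketched like this. The dictionary and queue are stand-ins chosen for illustration, not how any particular device implements them.

```python
from collections import deque

cache = {}               # indexed by address, so reads can be served from it
write_buffer = deque()   # plain FIFO queue: data waits here, nothing is looked up

def cache_read(address):
    """Return cached data for an address, or None on a miss."""
    return cache.get(address)

cache[0x10] = b"hot data"
write_buffer.append((0x20, b"pending write"))

hit = cache_read(0x10)
miss = cache_read(0x20)   # sits in the buffer, but the buffer is not indexed
```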

One of the big issues that can happen and possibly corrupt the system is if there is a power disruption/fluctuation when you are trying to write to the device. A sudden power loss, for example, can cause the loss of host data in the volatile write buffer – as well as possibly corrupt data on the SSD.

So, you need to prepare and manage this. Well, the device does, anyway.

There are various stages of writing to a device, and the device will acknowledge back to the host (such as the application or operating system doing the writing) where it is in the process.

Even on PCIe-based systems, the host and the storage device act as separate entities (because, well, they are physically separate entities). When you have an application that needs to write data to a device, it needs to move it from volatile memory (e.g., RAM) into a buffer on the SSD (called a write buffer). When the SSD receives the data, it needs to acknowledge back to the host that the data has been received. At this point, it’s up to the host not to release the data from volatile memory until it gets this acknowledgement.

This back-and-forth is constant. “Do you have it?” “No, not yet.” “How about now?” “Yes, I’ve got it.”
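Here is a minimal sketch of that handshake, with the host holding on to its copy until the acknowledgement arrives. The class and function names are hypothetical, and a real storage protocol has many more states than a single True/False.

```python
class ToySSD:
    def __init__(self):
        self.write_buffer = []

    def receive(self, data):
        """Accept data into the write buffer and acknowledge receipt."""
        self.write_buffer.append(data)
        return True   # the acknowledgement

def host_write(ssd, host_memory, key):
    """Send data to the SSD; release the host copy only after the ack."""
    acked = ssd.receive(host_memory[key])
    if acked:
        del host_memory[key]
    return acked

ram = {"doc": b"chapter one"}
ssd = ToySSD()
ok = host_write(ssd, ram, "doc")
```

Notice that once the host has its acknowledgement, the only copy of the data lives in the SSD’s write buffer.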

Difference between devices

Now, at this stage, when the SSD has the data, it will tell the host that it’s got the information. This is where different classes of SSDs/USB sticks, etc. handle things differently.

Enterprise SSDs (the kind of SSDs that exist in data centers) protect this data in the main buffer. Remember, this buffer is volatile, which means that at this point if there is a sudden power loss, it’s gone.

Let me say that a slightly different way. The host thinks that the SSD has got it, the SSD has said that it has it. The host dumps the data from its main memory and thinks that it’s safe. Then there’s a power outage.

The write buffer on the SSD – which is volatile – is suddenly empty. But it hasn’t written to the Flash yet. Hilarity ensues.

As I said, enterprise-class SSDs have a protection mechanism for this buffer, but other types of Flash devices (including the consumer SSDs you buy from your local electronics store, USB sticks, etc.) do not.

In fact, “consumer” SSDs do not protect your data at all until it’s at the final resting stage. The data is not protected during the transmission from the host, even after it’s acknowledged back to the host, or when it’s migrated from the write buffer back to the NAND pages. (See how all this stuff comes together? And here you thought the conversation about pages and blocks was superfluous.)

Enterprise-grade SSDs, on the other hand, protect the data at every stage along the way (except for the initial transmission from the host – no device can protect data it hasn’t received yet).
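The difference can be pictured with a toy simulation. It assumes that a protected drive has enough reserve energy to flush its write buffer to NAND when power drops; that mechanism, and every name below, is an assumption made for illustration rather than a description of any specific product.

```python
class ToyDrive:
    def __init__(self, power_loss_protection):
        self.power_loss_protection = power_loss_protection
        self.write_buffer = []   # volatile
        self.nand = []           # non-volatile

    def write(self, data):
        self.write_buffer.append(data)
        return "ack"             # host is told the data is safe at this point

    def power_loss(self):
        if self.power_loss_protection:
            # assumed: enough stored energy to flush the buffer before shutdown
            self.nand.extend(self.write_buffer)
        self.write_buffer.clear()  # volatile contents vanish either way

consumer = ToyDrive(power_loss_protection=False)
enterprise = ToyDrive(power_loss_protection=True)
for drive in (consumer, enterprise):
    drive.write(b"acknowledged but not yet on flash")
    drive.power_loss()
```

Both drives said "ack", but only one of them still has the data after the lights go out.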

Additional Protection

There is something called Data Path Protection, which is intended to protect data from bit errors across data transmission. Assuming you aren’t overwhelmed with what I’ve written, there is a great PDF (direct link) that Micron put out about the differences between consumer and enterprise SSDs.
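The general idea of catching bit errors in transit can be sketched with a plain checksum. This uses CRC-32 from Python’s standard library purely as a stand-in; the actual protection schemes inside SSDs are vendor-specific and are not shown here.

```python
import zlib

def send(payload: bytes):
    """Attach a CRC-32 checksum to the payload before it is transmitted."""
    return payload, zlib.crc32(payload)

def receive(payload: bytes, checksum: int) -> bool:
    """Return True only if the payload still matches its checksum."""
    return zlib.crc32(payload) == checksum

data, crc = send(b"important bits")
corrupted = bytes([data[0] ^ 0x01]) + data[1:]   # flip one bit in transit
```

Here `receive(data, crc)` accepts the intact payload, while `receive(corrupted, crc)` rejects the copy with the flipped bit.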

Also, there is something called Asynchronous Power Loss Protection, which has the goal of protecting data-at-rest during a power loss event. As you can imagine, there are differences between consumer and enterprise-grade SSDs.

As you have probably already surmised, this extra protection explains the cost differences between enterprise-grade and consumer-grade SSDs. Flash devices that are designed to be disposable, such as camera SD cards, USB sticks, etc., don’t have many, if any, of these additional protections. The more protection, the more costly.

These are, of course, data protection mechanisms for the device. We expect that the device will eventually succumb to failure (not if, but when), so that’s why we use RAID systems, Erasure Coding, and other systematic approaches for data protection, in addition to good backup and recovery mechanisms.
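As one small illustration of the system-level idea, here is the XOR parity trick that parity-based RAID levels build on. It is a bare-bones sketch with two made-up "drives" and no striping, so treat it as the principle only.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

drive_a = b"\x0f\xf0\xaa"
drive_b = b"\x33\x55\x01"
parity = xor_bytes(drive_a, drive_b)   # stored on a third drive

# drive_b dies: rebuild its contents from the survivor plus parity
rebuilt_b = xor_bytes(drive_a, parity)
```

Because XOR undoes itself, any one lost drive can be recomputed from the others, which is exactly the kind of protection that sits above the individual device.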

This is probably more than you were expecting, but hopefully it was consumable and approachable enough to get the answers you were looking for (and then some).

If you’re interested in finding out more information about storage, storage networking, or data center technology, there is an extensive library of content I’ve organized for you – from the absolute beginner to the hardcore admin. Feedback is always greatly appreciated.

Comments

Thank you very much for the thorough explanation.

Thanks for reading and leaving a comment. 🙂

Hi

I am a simple over fifties author with who requires a lot of storage for manuscripts what type of computer do I need.

Hi there, Esse.

My recommendation is that you find a computer that you can afford, and an external USB hard drive for backup. Manuscripts in general don’t take up a lot of room (relatively), so you should be safe with pretty much anything you choose.

Good luck!